#19 - It's been a while!

Now we're back, celebrating 21K+ subscribers with a lot of other cool updates on the way 🎉

Welcome to the 19th issue of AI Agents Simplified. This issue is presented to you by Tabsy.

📰 What happened while we were on vacation?

Gemma 3n Is Here: Google’s Most Capable On-Device Model Yet

The Gemma ecosystem has hit 160M downloads, and now Google is taking it further with the full release of Gemma 3n, a mobile-first, multimodal LLM built for real on-device use.

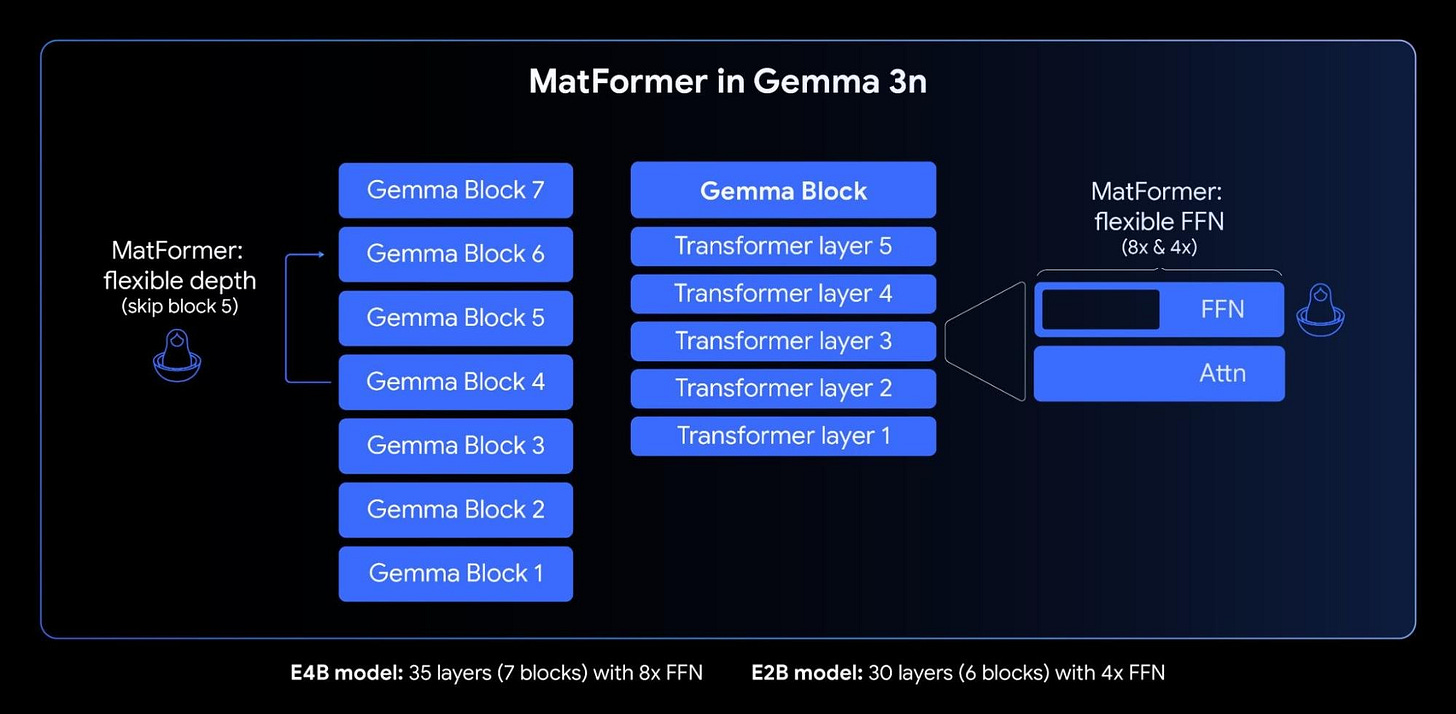

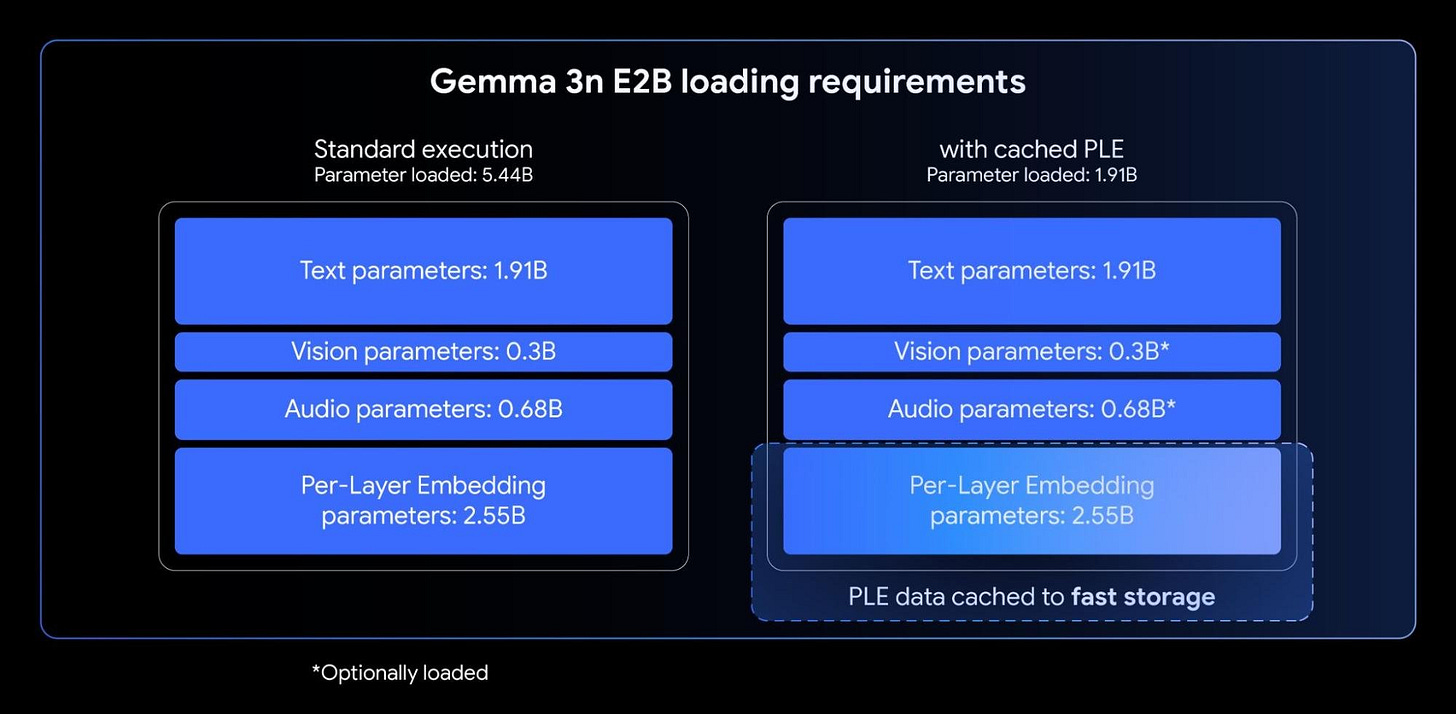

Gemma 3n comes in two sizes, E2B and E4B, with raw parameter counts of 5B and 8B. Thanks to architectural innovations such as Per-Layer Embeddings, they run with a memory footprint comparable to traditional 2B and 4B models: as little as 2GB (E2B) and 3GB (E4B).

What’s new:

Multimodal from the start: Text, image, audio, and video inputs built-in.

MatFormer: A “Matryoshka Transformer” architecture that nests smaller models inside a larger one for elastic inference.

Per-Layer Embeddings (PLE): Better performance without extra memory cost.

KV Cache Sharing: Faster responses for long-context, streaming use.

New encoders: USM for audio (about 6 tokens per second of audio) and MobileNet-V5 for vision, both optimized for edge devices.
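To make the MatFormer idea a bit more concrete, here is a toy sketch of the “Matryoshka” principle: a smaller sub-model reuses a prefix of the larger model’s feed-forward hidden units, so one set of weights serves several sizes. This is not Gemma 3n’s code; the function name `nested_ffn`, the tiny sizes, and the weights are all hypothetical, and the real model applies the idea at far larger scale.

```python
from typing import List

Matrix = List[List[float]]

def relu(x: float) -> float:
    return max(0.0, x)

def nested_ffn(x: List[float], w_in: Matrix, w_out: Matrix, width: int) -> List[float]:
    """Hypothetical feed-forward block that uses only the first `width` hidden units.

    w_in:  d_ff rows, each of length d_model (input -> hidden)
    w_out: d_model rows, each of length d_ff (hidden -> output)
    A smaller `width` gives a cheaper nested sub-model sharing the same weights.
    """
    if not 0 < width <= len(w_in):
        raise ValueError("width must be between 1 and d_ff")
    # Hidden activations for the selected prefix of units only.
    hidden = [relu(sum(w * xi for w, xi in zip(row, x))) for row in w_in[:width]]
    # Project back to d_model, reading only the matching prefix of each output row.
    return [sum(w * h for w, h in zip(row[:width], hidden)) for row in w_out]
```

Calling it with `width=len(w_in)` runs the full block; a smaller `width` trades some quality for less compute without loading a separate model.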

Gemma 3n E4B scores over 1300 on LMArena with just 8B raw parameters, making it the first model under 10B to do so. It’s already supported on Hugging Face, llama.cpp, Ollama, MLX, and more.

It’s powerful, multilingual (140+ languages), and genuinely built for devs shipping real products locally.

The weights are openly available on Hugging Face, so you can check it out:

Or you can try it here:

🤖 Siri, But If It Actually Worked

This issue is sponsored by Tabsy, the AI tool that quietly does what Siri, Spotlight, and 10 billion dollars of Apple R&D still can’t: help you actually find stuff.

It searches your local files by meaning, not just by filename or keyword. You can ask it things like:

“What did I say about budget approvals last quarter?”

“Where’s that insurance document with the updated address?”

“What’s my flight number again?”

It’s local. It’s fast. It’s spooky smart.

Also, it doesn’t randomly say “I found this on the web.” 🙄
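We don’t know how Tabsy works internally. Generically, “search by meaning” is usually done by turning files and queries into embedding vectors and ranking files by cosine similarity. The sketch below uses tiny hand-made vectors as a stand-in for a real embedding model; the file names, the 3-dimensional vectors, and the `search` helper are all hypothetical.

```python
import math
from typing import Dict, List, Tuple

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def search(query_vec: List[float], index: Dict[str, List[float]], k: int = 1) -> List[Tuple[str, float]]:
    """Rank indexed files by similarity to the query vector, best first."""
    scored = [(name, cosine(query_vec, vec)) for name, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]

# Hypothetical 3-d "embeddings"; axes loosely mean (finance, travel, insurance).
# A real system would get these from an embedding model.
INDEX = {
    "q3_budget_notes.txt": [0.9, 0.1, 0.0],
    "boarding_pass.pdf": [0.0, 1.0, 0.1],
    "home_policy_update.pdf": [0.1, 0.0, 0.95],
}

# Pretend embedding of "What's my flight number again?"
print(search([0.05, 0.9, 0.0], INDEX)[0][0])  # boarding_pass.pdf
```

Note that “flight” appears nowhere in `boarding_pass.pdf`’s name: the match comes from the vectors pointing the same way, which is the difference between meaning-based and keyword search.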

🔮 Cool AI Tools & Repositories

LibreChat - Enhanced ChatGPT Clone: An open-source, self-hostable ChatGPT-style chat interface that brings multiple AI providers and models together in one place.

📃 Paper of the Week

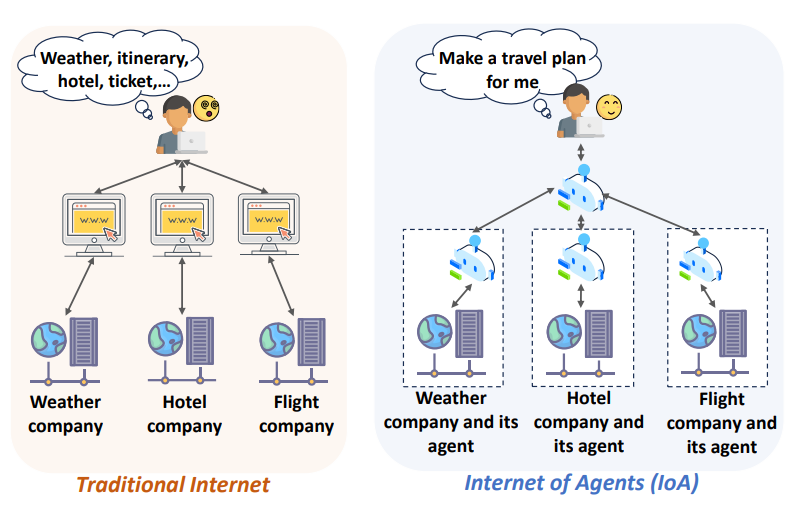

Standardized Protocols for Agent Communication: The paper outlines the rise of protocols like Model Context Protocol (MCP), Agent-to-Agent (A2A), and Agent Communication Protocol (ACP-IBM), which formalize how AI agents interact with tools, other agents, and environments, enabling coordination, interoperability, and scalability in multi-agent systems.
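As a taste of what “formalizing how agents interact with tools” looks like, MCP messages are JSON-RPC 2.0. The sketch below builds a `tools/call` request and extracts text from a response, following the MCP specification’s message shape as we understand it; it covers only MCP (not A2A or ACP), skips transport and the initialization handshake, and the tool `get_weather` is hypothetical.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids must be unique per session

def tools_call_request(name: str, arguments: dict) -> str:
    """Serialize an MCP-style JSON-RPC 2.0 request asking a server to run a tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })

def text_from_result(raw: str) -> str:
    """Join the text content blocks of a tools/call response, or raise on error."""
    msg = json.loads(raw)
    if "error" in msg:
        raise RuntimeError(msg["error"].get("message", "unknown error"))
    blocks = msg["result"].get("content", [])
    return "\n".join(b["text"] for b in blocks if b.get("type") == "text")

print(tools_call_request("get_weather", {"city": "Paris"}))
```

Because every client and server agrees on this envelope, any MCP-aware agent can call any MCP server’s tools without custom glue code.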

Core Agent Architecture: AI agents are described as modular systems with five key components (Perception, Memory, Tools, Reasoning, and Action) that allow them to sense, recall, integrate external resources, plan, and execute across specialized tasks.
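Here is a minimal sketch of how those five components might be wired together in code. The class, the method names, and the keyword-matching “planner” are hypothetical stand-ins (a real agent would use an LLM for reasoning); the point is only to show where each component sits in one step of the loop.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

@dataclass
class Agent:
    """Toy agent wiring the five components named in the paper (hypothetical design)."""
    tools: Dict[str, Callable[[str], str]]           # Tools: name -> callable
    memory: List[str] = field(default_factory=list)  # Memory: running history

    def perceive(self, observation: str) -> str:
        # Perception: normalize raw input into something the agent can reason over.
        return observation.strip().lower()

    def reason(self, percept: str) -> Optional[str]:
        # Reasoning: a keyword rule standing in for an LLM planner.
        for name in self.tools:
            if name in percept:
                return name
        return None

    def act(self, observation: str) -> str:
        # Action: one perceive -> remember -> reason -> use-tool step.
        percept = self.perceive(observation)
        self.memory.append(percept)
        tool = self.reason(percept)
        result = self.tools[tool](percept) if tool is not None else "no tool matched"
        self.memory.append(result)
        return result

agent = Agent(tools={"add": lambda text: str(sum(int(t) for t in text.split() if t.isdigit()))})
print(agent.act("ADD 2 3"))  # 5
```

Keeping the components separate like this is what makes the architecture modular: you can swap the planner or add tools without touching perception or memory.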

Security Threat Landscape: The paper categorizes attack surfaces across three vectors: User-Agent (U-A) (e.g., adversarial audio, psychological profiling), Agent-Agent (A-A) (e.g., spoofing, capability poisoning), and Agent-Environment (A-E) (e.g., command injection, RAG poisoning).

Defense Mechanisms: Proposed defenses include sandboxing, zero-trust registration, semantic anomaly detection (e.g., TrustRAG), orchestration monitoring (e.g., GuardAgent), and system-level mediation to prevent model misuse, poisoning, and covert task execution.
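To illustrate one of these defenses, here is a sketch of zero-trust registration: an agent is admitted only if its capability manifest carries a valid signature from a trusted issuer, and every capability is re-checked at call time with deny-by-default. This blocks the capability-poisoning attack mentioned above, where an agent quietly claims extra powers. The class and function names are hypothetical, and we assume a shared HMAC-SHA256 key for brevity; real deployments would more likely use public-key signatures. It is not the paper’s implementation.

```python
import hashlib
import hmac
import json
from typing import Dict, Set

def sign_manifest(key: bytes, manifest: dict) -> str:
    """HMAC-SHA256 over a canonical (sorted-key) JSON encoding of the manifest."""
    payload = json.dumps(manifest, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()

class ZeroTrustRegistry:
    """Admit agents only with a valid issuer signature; check capabilities on every call."""

    def __init__(self, issuer_key: bytes) -> None:
        self._key = issuer_key
        self._caps: Dict[str, Set[str]] = {}

    def register(self, manifest: dict, signature: str) -> bool:
        expected = sign_manifest(self._key, manifest)
        if not hmac.compare_digest(expected, signature):
            return False  # tampered or unsigned manifest: reject, change nothing
        self._caps[manifest["agent_id"]] = set(manifest["capabilities"])
        return True

    def authorize(self, agent_id: str, capability: str) -> bool:
        # Deny by default: unknown agents and undeclared capabilities are refused.
        return capability in self._caps.get(agent_id, set())
```

`hmac.compare_digest` is used instead of `==` so the comparison time doesn’t leak how many characters of a forged signature were correct.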

Call for Open Standards and Auditable Infrastructure: The authors highlight limitations in closed agent ecosystems and recommend open, cross-protocol standards, real-time supervision, and blockchain-based storage to ensure security, transparency, and auditability, especially in high-stakes domains such as finance or healthcare.
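The auditability benefit of blockchain-based storage comes largely from hash chaining: each log entry includes the hash of the previous one, so editing any past entry breaks every hash after it. The sketch below shows only that tamper-evidence idea for an agent action log; it is not a blockchain (no distribution or consensus), and the function names and event fields are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(prev: str, event: dict) -> str:
    body = json.dumps({"prev": prev, "event": event}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()

def append_entry(log: List[Dict], event: dict) -> None:
    """Append an agent event, linking it to the hash of the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev, "event": event, "hash": _digest(prev, event)})

def verify(log: List[Dict]) -> bool:
    """Recompute the chain; any edited, removed, or reordered entry fails the check."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True
```

An auditor only needs the latest hash from a trusted source to confirm that the whole history of agent actions hasn’t been rewritten.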
