#20 - ChatGPT Agent IS HERE!!

ChatGPT can now think, plan, browse, run code, and complete tasks, not just respond to prompts!

Welcome to the 20th issue of AI Agents Simplified. This issue is presented to you by Tabsy.

📰 What Happened in the Last Week?

OpenAI has made one of its biggest moves yet. ChatGPT can now think, plan, browse, run code, and complete tasks, not just respond to prompts. The new agentic system is here, and it’s built to work like an actual assistant, not a chatbot pretending to be one.

Here’s what changed:

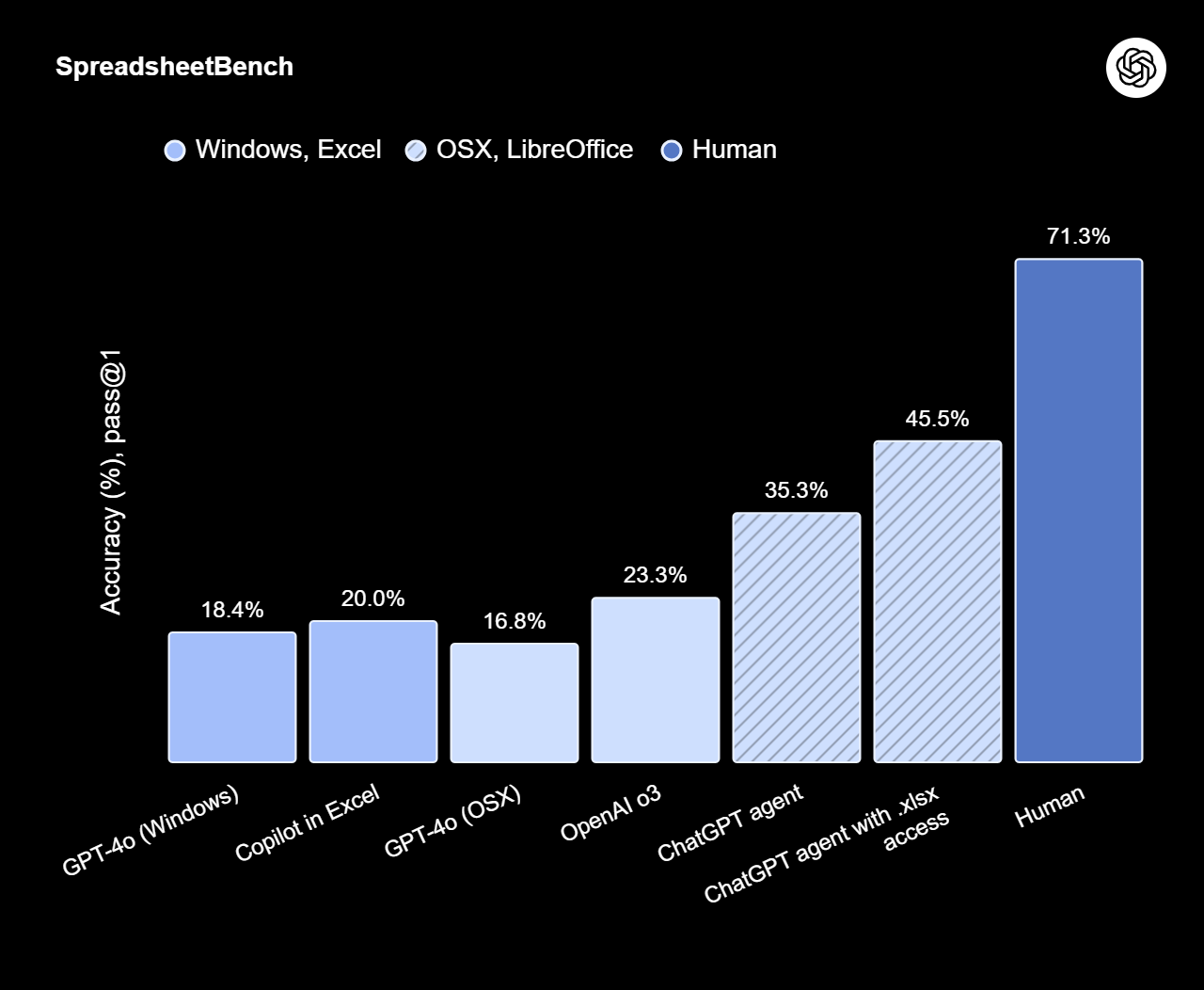

ChatGPT now operates using its own virtual machine. That means when you ask it to do something complex, it doesn’t just return a list of ideas or links; it acts. It can browse websites, click buttons, fill out forms, write and run code, extract data, use APIs, manipulate spreadsheets or slideshows, and deliver completed work that is structured, editable, and usable.

You can now say things like:

“Look at my calendar and brief me on upcoming client meetings using the latest news about those companies.”

“Plan and purchase ingredients for a Japanese breakfast for four.”

“Analyze these three competitors and prepare a slide deck for our next investor update.”

And ChatGPT will not only handle the research and reasoning but also execute the steps to get there: navigating the web, securely logging into platforms, filtering and selecting results, and returning structured output like a polished presentation or a working spreadsheet.

This new mode is powered by a unified architecture that merges the strengths of past features: Operator (for interacting with web interfaces), Deep Research (for deep synthesis and summarization), and ChatGPT’s conversational reasoning. You can toggle on Agent Mode from the tools dropdown in any conversation. Once active, the model chooses from a set of built-in tools, including:

A visual web browser that can interact with real websites (click, scroll, input).

A text browser for lean, fast information retrieval.

A terminal for running code and handling automation.

API access and app connectors that let it fetch data from Gmail, GitHub, Notion, and more.

It also remembers its working context across tools. For example, it can download a PDF, extract and summarize content using the terminal, then switch to a visual browser to pull supporting data, and finally generate a slide deck, all within a single task loop.
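To make the idea of a shared working context concrete, here is a minimal Python sketch. It is not OpenAI's implementation: the `TaskContext` class and the tool functions (`download_pdf`, `summarize`, `build_slides`) are hypothetical stand-ins, and the PDF text is made-up sample data. The point is only that each step can read what earlier steps produced.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class TaskContext:
    """Shared state that every tool can read from and write to (hypothetical)."""
    artifacts: Dict[str, str] = field(default_factory=dict)
    log: List[str] = field(default_factory=list)


# Each "tool" is a plain function standing in for a real browser or terminal.
def download_pdf(ctx: TaskContext) -> None:
    # Made-up sample text; a real tool would fetch and parse a file.
    ctx.artifacts["pdf_text"] = "Q2 revenue grew 12%. Churn fell to 3%."


def summarize(ctx: TaskContext) -> None:
    # Reads the previous tool's output from the shared context.
    first_sentence = ctx.artifacts["pdf_text"].split(". ")[0]
    ctx.artifacts["summary"] = first_sentence.rstrip(".") + "."


def build_slides(ctx: TaskContext) -> None:
    ctx.artifacts["slides"] = "Slide 1: " + ctx.artifacts["summary"]


def run_task(steps: List[Callable[[TaskContext], None]]) -> TaskContext:
    """Run tools in order inside one task loop, sharing a single context."""
    ctx = TaskContext()
    for step in steps:
        step(ctx)
        ctx.log.append(step.__name__)
    return ctx


result = run_task([download_pdf, summarize, build_slides])
print(result.artifacts["slides"])
```

Because every tool writes into the same context object, the slide step never needs to re-download or re-summarize anything.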

This launch marks the shift from passive language models to active agents that can coordinate and complete workflows. You’re still in full control: ChatGPT asks for permission before taking significant actions, and you can jump in, redirect, or stop the task at any time. It even supports recurring automations, for example generating weekly reports or summaries on a set schedule.
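The permission step can be pictured as a small gate in front of each action. The sketch below is an illustration only: the `SIGNIFICANT` set, the action names, and the `approve` callback are assumptions made for this example, not how ChatGPT actually classifies or confirms actions.

```python
from typing import Callable, List

# Hypothetical set of action types that need explicit user approval.
SIGNIFICANT = {"purchase", "send_email", "submit_form"}


def run_actions(actions: List[str], approve: Callable[[str], bool]) -> List[str]:
    """Run actions in order; stop as soon as the user declines a significant one."""
    done = []
    for action in actions:
        if action in SIGNIFICANT and not approve(action):
            break  # the user declined, so the task stops here
        done.append(action)
    return done


# A stand-in for the user: approves everything except purchases.
completed = run_actions(
    ["search", "add_to_cart", "purchase", "send_email"],
    approve=lambda action: action != "purchase",
)
print(completed)
```

Routine steps such as searching run without interruption, while anything in the significant set waits for a yes or no.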

Access is rolling out to Pro, Plus, and Team users now. Pro users get 400 messages per month; others get 40, with optional credit-based extensions. Enterprise and Education plans will follow soon.

This is just the beginning. Right now, capabilities like slideshow generation are still in beta and rough around the edges in formatting and polish, but OpenAI says more powerful iterations are already in training.

Agent Mode is a major step toward turning ChatGPT into an actual AI worker. Not just a tool you chat with but one that gets work done, across apps, files, and web platforms, from start to finish.

If you’ve ever wished ChatGPT could go beyond ideas and into execution, this is it. The interface stays the same. The engine behind it is now agentic.

🤖 Siri, But If It Actually Worked

This issue is sponsored by Tabsy. The AI tool that quietly does what Siri, Spotlight, and 10 billion dollars of Apple R&D still can’t: help you actually find stuff.

It searches your local files by meaning, not just filenames or keywords. You can ask it things like:

“What did I say about budget approvals last quarter?”

“Where’s that insurance document with the updated address?”

“What’s my flight number again?”

It’s local. It’s fast. It’s spooky smart.

Also, it doesn’t randomly say “I found this on the web.” 🙄

📃 Paper of the Week

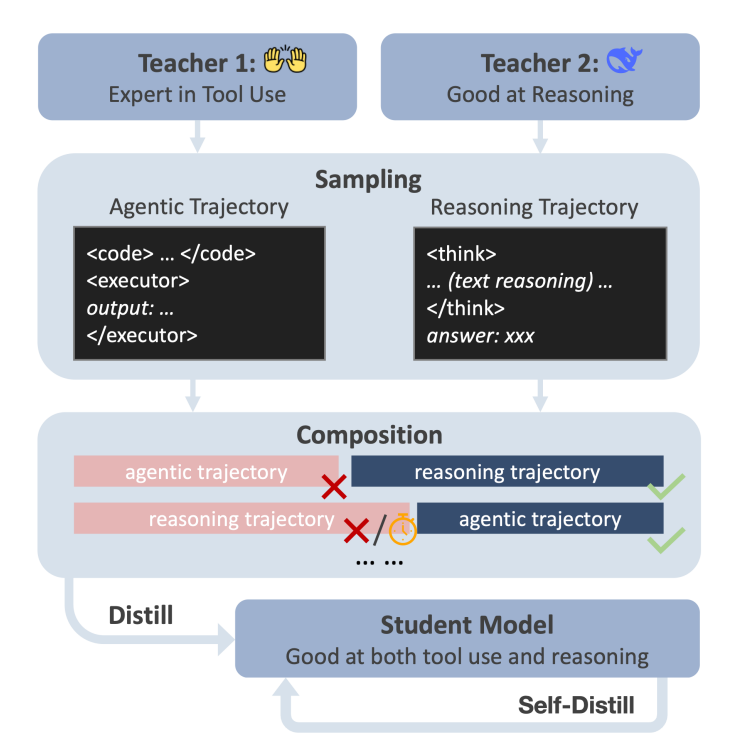

This week’s paper is “Agentic-R1: Distilled Dual-Strategy Reasoning” by Weihua Du and the team at Carnegie Mellon. It introduces Agentic-R1, a reasoning-optimized language model that merges two different thinking styles into one adaptive agent. The core idea? Combine chain-of-thought reasoning with tool-augmented computation and let the model pick which one to use based on the task.

Here’s what makes this work stand out:

DualDistill Framework: Agentic-R1 is trained using two strong teacher models: OpenHands (for tool use) and DeepSeek-R1 (for long-form text reasoning). The student learns both strategies and how to switch between them.

Hybrid Reasoning Strategy: It doesn’t just use CoT or tools blindly. Agentic-R1 dynamically selects the right strategy: text reasoning for conceptual problems, tool-based computation for hard math. That flexibility leads to real gains in performance.

Self-Distillation: The student gets better over time by learning from its own mistakes, using feedback from the teachers to fix wrong answers. Especially useful for boosting smaller models.

Efficient Training Setup: Instead of massive datasets, they trained Agentic-R1 on ~2,000 distilled reasoning traces. Paired with a pre-trained student model (DeepSeekR1-Distill-7B), this makes it lean and practical.

Performance: It beats standard long-CoT models on tough reasoning and math tasks. The adaptive strategy helps avoid “overthinking” or using the wrong tools, a common failure mode in today’s agents.
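The points above can be loosely illustrated with a toy data-assembly step: collect traces from a text-reasoning teacher and a tool-using teacher, then keep only the ones that reach the correct answer. This is a simplified sketch, not the paper's pipeline. The `TeacherTrace` class, the `build_distill_set` function, and the sample problems are all invented for illustration, and the real trajectory composition is more involved.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TeacherTrace:
    strategy: str   # "text" (chain-of-thought) or "tool" (code execution)
    reasoning: str  # the trace the student would imitate
    answer: str     # the teacher's final answer


def build_distill_set(problems: List[dict]) -> List[Dict[str, str]]:
    """Keep every teacher trace whose answer matches the reference answer."""
    dataset = []
    for p in problems:
        for trace in p["traces"]:
            if trace.answer == p["gold"]:
                dataset.append({
                    "prompt": p["question"],
                    "strategy": trace.strategy,
                    "target": trace.reasoning,
                })
    return dataset


# Invented sample problems: one favors tools, the other favors text reasoning.
problems = [
    {
        "question": "What is 123456789 * 987654321?",
        "gold": str(123456789 * 987654321),
        "traces": [
            TeacherTrace("text", "Multiply digit by digit...", "not sure"),
            TeacherTrace("tool", "print(123456789 * 987654321)",
                         str(123456789 * 987654321)),
        ],
    },
    {
        "question": "Is the sum of two odd integers always even?",
        "gold": "yes",
        "traces": [
            TeacherTrace("text", "(2a+1)+(2b+1) = 2(a+b+1), so it is even.", "yes"),
            TeacherTrace("tool", "Checked a few cases in code.", "unknown"),
        ],
    },
]

dataset = build_distill_set(problems)
print([row["strategy"] for row in dataset])
```

A student fine-tuned on a mix like this sees tool traces on computation-heavy prompts and text traces on conceptual ones, which is the intuition behind learning when to use each strategy.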

This work points to a smarter way to train reasoning agents, one that’s fast, efficient, and better at knowing when to think and when to act. Solid step forward for building AI that adapts its problem-solving strategy in real time.
