So yeah, over the weekend Meta dropped three new Llama 4 models, and just like every other company launching a new LLM these days, they’re out here saying, “We’re the best.”

But... are they really?

Let’s break it down.

🤖 What Even is Llama 4?

Here’s the short version:

Llama 4 Scout: 17B active parameters, 16 experts, 109B total. It’s fast, fits on a single H100 GPU (with Int4 quantization), and Meta claims it outperforms Gemma 3, Gemini 2.0 Flash-Lite, and Mistral 3.1.

Llama 4 Maverick: Same 17B active parameters, but with 128 experts and 400B total. Meta says it beats GPT-4o and Gemini 2.0 Flash on multimodal benchmarks and matches DeepSeek v3 on reasoning and coding, all with fewer than half the active parameters.

Llama 4 Behemoth: The giga-chad model with 288B active parameters (about 2T total), still in training. Meta says it beats GPT-4.5, Claude Sonnet 3.7, and Gemini 2.0 Pro on several STEM benchmarks.

They’re open-weight, multimodal, mixture-of-experts (MoE) models, and you can already try Scout and Maverick on llama.com and Hugging Face. Meta’s also pushing Llama 4 into Messenger, WhatsApp, Instagram, and the Meta AI site.

Pre-training: Smarter Models, Smarter Approach

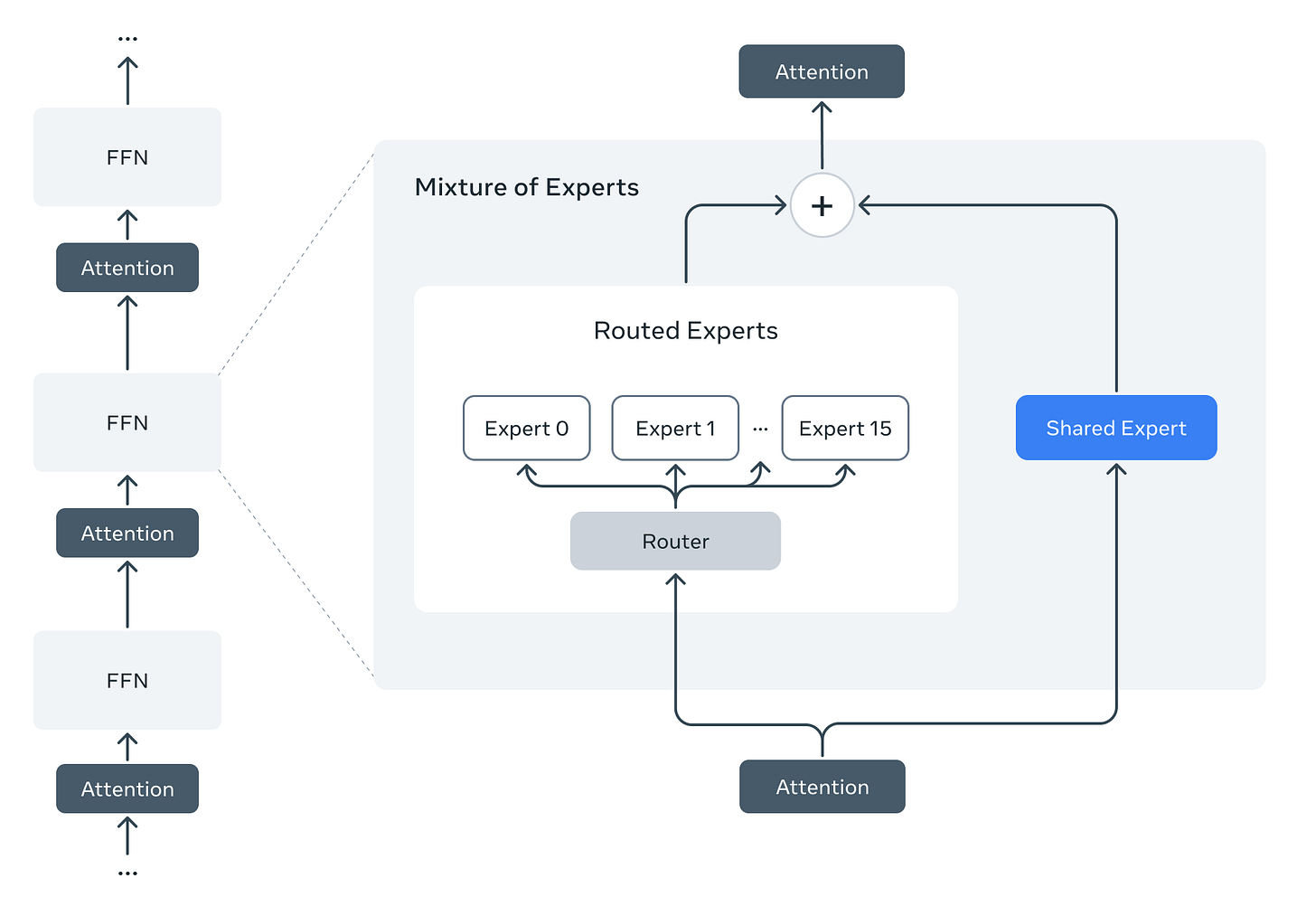

Llama 4 is Meta’s big leap: a smarter, cheaper, and way more efficient family of models. What’s cool is that they ditched the classic dense model idea and went with Mixture of Experts (MoE). Basically, not all parts of the model activate at once; each token only runs through the experts it gets routed to. That means less compute per token than a dense model of the same total size.

Llama 4 Maverick: 400B Params, 17B Active

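Maverick uses a hybrid structure that alternates dense and MoE layers. Each MoE layer has 128 routed experts plus a shared expert, and every token goes through the shared expert and just one routed expert, making it crazy efficient. Meta says the whole thing runs on a single H100 DGX host (a multi-GPU server, not a single card), which is still wild for a 400B-parameter model.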
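To make the routing idea concrete, here’s a toy sketch of one MoE layer where each token hits a shared expert plus a single routed expert, which is how Meta’s announcement describes Maverick. Everything else is an assumption for illustration: the tiny dimensions, the softmax gate, the "experts" being simple per-dimension scalings, and the names `make_expert` and `moe_layer`. It is not Meta’s implementation.

```python
import math
import random

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(seed, dim):
    # Hypothetical toy "expert": a fixed per-dimension scaling that stands in
    # for a real feed-forward network.
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
    return lambda token: [w * t for w, t in zip(weights, token)]

def moe_layer(token, router_weights, routed_experts, shared_expert):
    # Router: one score per routed expert (dot product with the token).
    scores = [sum(w * t for w, t in zip(row, token)) for row in router_weights]
    gates = softmax(scores)  # assumption: softmax gating, chosen for simplicity
    # Top-1 routing: only the best-scoring routed expert runs for this token.
    chosen = max(range(len(gates)), key=gates.__getitem__)
    routed_out = routed_experts[chosen](token)
    shared_out = shared_expert(token)  # the shared expert runs for every token
    output = [s + gates[chosen] * r for s, r in zip(shared_out, routed_out)]
    return output, chosen

DIM, NUM_ROUTED = 8, 128
rng = random.Random(0)
router_weights = [[rng.uniform(-1.0, 1.0) for _ in range(DIM)] for _ in range(NUM_ROUTED)]
routed_experts = [make_expert(i, DIM) for i in range(NUM_ROUTED)]
shared_expert = make_expert(10_000, DIM)
token = [rng.uniform(-1.0, 1.0) for _ in range(DIM)]

output, chosen = moe_layer(token, router_weights, routed_experts, shared_expert)
print(f"token used the shared expert plus routed expert {chosen} of {NUM_ROUTED}")
```

The point of the sketch: 128 experts exist, but only 2 of them do any work for a given token, which is why total and active parameter counts are so far apart.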

Multimodality: Text + Vision Built In

They went with early fusion, so text and vision tokens go into the same model backbone from the start. That’s how it “gets” vision and language so well. The vision encoder is based on MetaCLIP, but it was trained separately alongside a frozen Llama model so it adapts better to the LLM.

MetaP + FP8 = Crazy Efficient Training

They trained with MetaP, a technique Meta says reliably sets key hyperparameters like per-layer learning rates and initialization scales. They also used FP8 precision and report 390 TFLOPs/GPU on 32K GPUs while pre-training Behemoth. Insane. Oh, and it’s multilingual, pre-trained on 10x more multilingual tokens than Llama 3.

Long Contexts: 10M Tokens?!

Llama 4 Scout (the smaller sibling) claims a 10-million-token context window. That’s… basically infinite for most practical purposes. Perfect for codebases, multi-document tasks, or parsing large histories. They use an architecture called iRoPE: attention layers with no positional embeddings are interleaved with regular RoPE layers, plus temperature scaling of attention at inference time.
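Here’s a rough sketch of what "interleaved" could look like in practice, plus one plausible shape for the temperature scaling. Big caveat: the interval of 4, the log-based formula, and the constants `floor_scale` and `attn_scale` are all assumptions picked for illustration, and the function names are hypothetical. Meta’s post does not spell out these details.

```python
import math

def layer_layout(num_layers, nope_interval):
    # Assumption: every `nope_interval`-th layer skips positional embeddings
    # ("nope"); all the other layers use rotary position embeddings ("rope").
    return [
        "nope" if (i + 1) % nope_interval == 0 else "rope"
        for i in range(num_layers)
    ]

def attention_temperature(position, floor_scale=8192, attn_scale=0.1):
    # Hypothetical scaling factor for a query at a given position.
    # It stays at exactly 1.0 for short contexts and grows slowly (log-like)
    # as the position gets very large, so long-range attention stays sharp.
    return math.log(math.floor((position + 1) / floor_scale) + 1.0) * attn_scale + 1.0

layout = layer_layout(num_layers=8, nope_interval=4)
print(layout)

for pos in (0, 100_000, 10_000_000):
    print(pos, round(attention_temperature(pos), 3))
```

The design idea, as far as the announcement describes it, is that layers without positional embeddings don’t bake in a maximum trained length, and the inference-time scaling helps attention generalize to lengths beyond what training covered.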

Post-training: Hard Prompts Only

Instead of overfitting on easy data, they filtered out more than 50% of the examples tagged as easy (and pruned 95% of the SFT data for Behemoth!), then ran a three-stage pipeline on the harder examples that remained: lightweight SFT, then online RL, then lightweight DPO. This let them keep reasoning, coding, and convo strong without overtraining.
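A minimal sketch of that kind of pruning, assuming each example already carries a difficulty score from some judge model. The `Example` class, the score scale, and `drop_easiest` are hypothetical names for illustration; Meta hasn’t published the actual filtering code.

```python
from dataclasses import dataclass

@dataclass
class Example:
    prompt: str
    difficulty: float  # assumption: 0.0 (trivial) to 1.0 (very hard), set by a judge model

def drop_easiest(examples, drop_fraction):
    # Rank by difficulty and discard the easiest `drop_fraction` of the data.
    if not 0.0 <= drop_fraction <= 1.0:
        raise ValueError("drop_fraction must be between 0 and 1")
    ranked = sorted(examples, key=lambda ex: ex.difficulty)
    n_drop = round(len(ranked) * drop_fraction)
    return ranked[n_drop:]

dataset = [Example(prompt=f"prompt-{i}", difficulty=i / 20) for i in range(20)]

kept_small = drop_easiest(dataset, 0.50)  # roughly the Scout/Maverick setting
kept_big = drop_easiest(dataset, 0.95)    # roughly the Behemoth setting
print(len(kept_small), len(kept_big))
```

With 95% dropped, only the very hardest sliver of the data survives, which matches the "hard prompts only" framing.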

Continuous Online RL: Always Getting Better

The RL stage ran as a loop: train the model, use the updated model to filter the prompt pool down to medium and hard prompts, and repeat. Meta says alternating like this gave a better tradeoff between compute and accuracy.
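The alternating train/filter loop can be sketched as a toy simulation. Nothing here is real RL: "skill" is a single number, the pass-rate formula is made up, and the 0.75 cutoff is an arbitrary assumption. It only shows how the prompt pool shrinks toward harder prompts as the model improves.

```python
def estimated_pass_rate(skill, difficulty):
    # Made-up stand-in for "how often the current model solves this prompt".
    return max(0.0, min(1.0, 1.0 - difficulty + skill))

def keep_medium_to_hard(prompts, skill, max_pass_rate=0.75):
    # Filter step: drop prompts the current model already solves too easily.
    return [d for d in prompts if estimated_pass_rate(skill, d) <= max_pass_rate]

def run_rounds(prompts, rounds=4, skill=0.0, gain=0.1):
    batch_sizes = []
    for _ in range(rounds):
        batch = keep_medium_to_hard(prompts, skill)  # filter with the current model
        batch_sizes.append(len(batch))
        if batch:
            skill += gain  # train step stand-in: the model gets a bit better
    return batch_sizes

# Each prompt is represented only by its difficulty, from 0.0 to 1.0.
prompts = [i / 10 for i in range(11)]
batch_sizes = run_rounds(prompts)
print(batch_sizes)
```

Each round trains on fewer, harder prompts, so compute isn’t wasted on things the model already gets right.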

Behemoth: 2 Trillion Params of Raw Brain

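Llama 4 Behemoth is the teacher model, with 288B active and roughly 2T total parameters, and it was key in distilling the smaller models. Meta codistilled Maverick from it using a new loss function that dynamically weights soft and hard targets during training, and post-trained Behemoth itself using RL with hand-picked hard prompts + adaptive batching.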
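Here’s a small sketch of a distillation loss that blends soft targets (the teacher’s full probability distribution) with hard targets (the ground-truth label). The linear schedule that shifts weight from soft to hard over training is purely an assumption to show what "dynamic weighting" could mean; Meta hasn’t published the actual loss, and `distill_loss` is a hypothetical name.

```python
import math

def softmax(logits):
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def soft_loss(teacher_probs, student_probs):
    # Cross-entropy of the student against the teacher's distribution.
    return -sum(t * math.log(s) for t, s in zip(teacher_probs, student_probs) if t > 0)

def hard_loss(student_probs, label):
    # Standard cross-entropy against the ground-truth token.
    return -math.log(student_probs[label])

def distill_loss(student_logits, teacher_logits, label, step, total_steps):
    student = softmax(student_logits)
    teacher = softmax(teacher_logits)
    # Assumption: weight on soft targets decays linearly from 1.0 to 0.0.
    soft_weight = 1.0 - step / total_steps
    return soft_weight * soft_loss(teacher, student) + (1.0 - soft_weight) * hard_loss(student, label)

student_logits = [2.0, 0.5, -1.0]
teacher_logits = [3.0, 1.0, -2.0]
for step in (0, 500, 1000):
    print(step, round(distill_loss(student_logits, teacher_logits, label=0, step=step, total_steps=1000), 4))
```

Early on the student mostly imitates the teacher; later it leans more on the true labels. Other schedules would fit the same description.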

Massive RL Infra Revamp

Training a 2T param model forced them to rebuild their RL infrastructure from scratch. They made it fully asynchronous, more parallel, and more memory-friendly. Meta reports roughly 10x better training efficiency than previous generations.

Model Lineup TL;DR

Llama 4 Maverick: 400B total / 17B active | Best general-use + vision + chat

Llama 4 Scout: 109B total / 17B active | Best for long context (10M tokens!)

Llama 4 Behemoth: 2T total / 288B active | Teacher model, still in training and not released
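Some quick back-of-the-envelope math on those numbers, sketched in Python. It shows what fraction of each model is active per token and how much memory the weights alone would take at different precisions. The 80 GB figure is the H100’s memory capacity; the estimate ignores activations and KV cache, so treat it as a lower bound.

```python
# (total params, active params), both in billions, taken from the lineup above.
MODELS = {
    "Scout": (109, 17),
    "Maverick": (400, 17),
    "Behemoth": (2000, 288),
}

H100_MEMORY_GB = 80

def active_fraction(total_b, active_b):
    # Share of the parameters that actually run for a single token.
    return active_b / total_b

def weight_memory_gb(total_b, bits_per_param):
    # Billions of params times bytes per param gives gigabytes of weights.
    return total_b * bits_per_param / 8

for name, (total_b, active_b) in MODELS.items():
    pct = active_fraction(total_b, active_b) * 100
    print(f"{name}: {pct:.1f}% of parameters active per token")

scout_int4 = weight_memory_gb(109, 4)
scout_bf16 = weight_memory_gb(109, 16)
maverick_fp8 = weight_memory_gb(400, 8)
print(f"Scout at 4-bit: {scout_int4} GB, at 16-bit: {scout_bf16} GB")
print(f"Maverick at 8-bit: {maverick_fp8} GB vs {8 * H100_MEMORY_GB} GB on an 8-GPU host")
```

This is why the "single H100" claim for Scout depends on 4-bit quantization, and why Maverick needs a whole multi-GPU host rather than a single card.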

Sounds impressive, right?

But that’s just the official line. Let’s get into the drama.

✒️ So... What’s the Reddit Crowd Saying?

One Redditor ran both Scout and Maverick through KCORES LLM Arena tests, and uh... they were not impressed.

Here’s the gist:

The reviewer does say maybe Llama 4 is still useful for multimodal stuff or long-context tasks, but for coding? Straight-up don’t bother.

🔍 And Even LMArena had to Step in

Things got so heated that LMArena (yeah, the leaderboard folks) dropped a statement too.

They said:

The Maverick model Meta submitted was a customized version tuned for human preference, something Meta should’ve made clearer.

They’re updating their policies now to make sure all models are tested fairly and transparently.

And yes, they’re adding the Hugging Face version of Llama 4 Maverick to Arena for a more honest eval.

So yeah... Meta may have flexed hard on paper, but in real-world use, especially in coding, it’s looking kind of shaky right now.

🧠 Final Thoughts

Llama 4 might be strong in multimodal, long-context, or on-device scenarios. Maybe even better than most when it comes to memory efficiency and deployment.

But for devs looking for raw coding power?

Maverick and Scout ain’t it, at least not yet.

Let’s see what Behemoth brings once it's done training. Until then, it’s probably safer to stick with the likes of DeepSeek, GPT-4o, or Claude if you're building serious dev tools.