In 2025, building AI agents isn't just about connecting a Large Language Model (LLM) to some tools and calling it a day.

If you want agents that actually work in real-world scenarios, with real-world users, you need strategy, structure, and solid design principles.

Lucky for us, the amazing team at Anthropic dropped a full playbook on this called "Building Effective Agents". Here's the simplified, practical breakdown you need 👇

First: What’s an “Effective” AI Agent?

Not every chatbot is an agent. Not every app using an LLM is an agent.

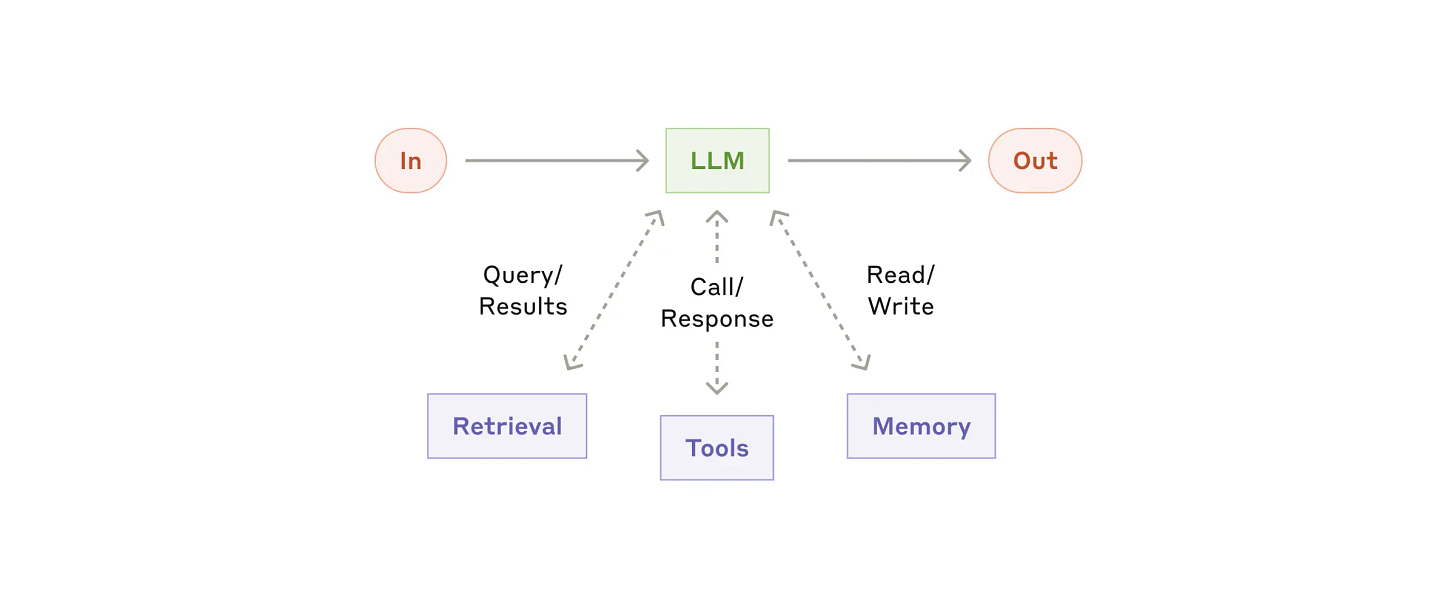

An effective AI agent has three key powers:

Autonomy: It can make decisions and act on behalf of the user without needing constant babysitting.

Memory: It remembers what happened before and uses that context in later decisions.

Tool Use: It can interact with APIs, databases, files, or apps to get things done.

Goal: Create agents that users can trust to complete real tasks, not just produce random paragraphs of text.
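To make the three powers concrete, here is a minimal sketch of an agent loop in Python. The "model" is a hypothetical stand-in function (`fake_model`) rather than a real LLM call, and the tool name, order ID, and `max_steps` limit are illustrative assumptions. The point is the shape: the agent decides what to do next (autonomy), records every step (memory), and calls tools to act (tool use).

```python
from typing import Callable

# Hypothetical tool: in a real agent this would call an API, database, or file.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_order": lambda order_id: f"Order {order_id}: shipped",
}


def fake_model(goal: str, memory: list[str]) -> tuple[str, str]:
    """Stand-in for an LLM call (assumption): returns (action, argument).

    It requests a tool call first, then finishes once a tool result is in memory.
    """
    if not any(entry.startswith("tool:") for entry in memory):
        return ("lookup_order", "A123")
    return ("finish", memory[-1].removeprefix("tool: "))


def run_agent(goal: str, max_steps: int = 5) -> tuple[str, list[str]]:
    memory: list[str] = [f"goal: {goal}"]  # memory: everything the agent has seen
    for _ in range(max_steps):  # autonomy: the loop continues without user prompts
        action, arg = fake_model(goal, memory)
        if action == "finish":
            return arg, memory
        result = TOOLS[action](arg)  # tool use: act on the outside world
        memory.append(f"tool: {result}")
    return "Stopped: step limit reached", memory


answer, trace = run_agent("Where is order A123?")
print(answer)  # Order A123: shipped
```

The step limit is a simple safety valve: an autonomous loop should always have a way to stop even if the model never says "finish".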

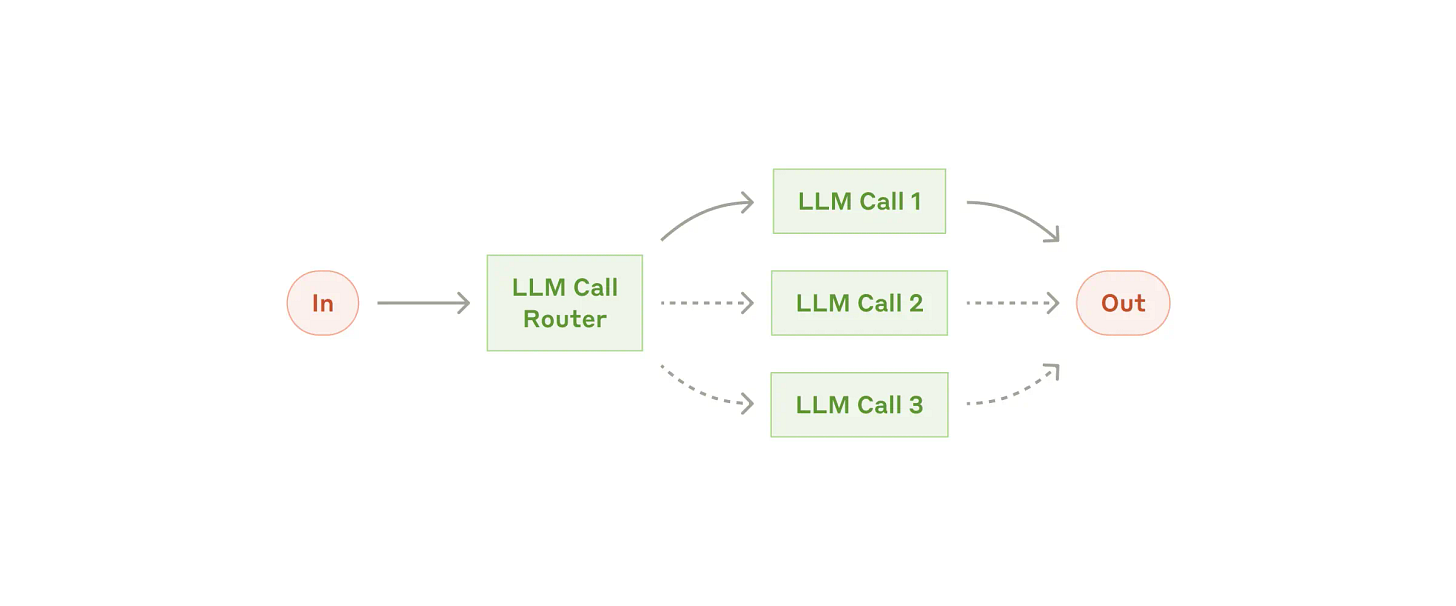

1. Start with a Narrow, Valuable Use Case

The first trap most people fall into? Building agents that are "too general."

In 2025, the best agents start narrow.

You want the agent to be laser-focused on solving one painful user problem.

How to Pick a Use Case:

Clear user need (people would want this automated)

Specific success criteria (it’s obvious if it worked or not)

Stable environment (the world the agent operates in doesn’t change every five seconds)

Examples:

A helpdesk agent that answers warranty questions.

A sales agent that fills out CRM records after meetings.

A content agent that drafts LinkedIn posts from meeting notes.

Start small. Master one thing. Then expand.

2. Define a Clear Agent Persona

Here’s the thing: Agents perform way better when they have a specific identity and instructions.

You don’t want a generic "assistant." You want:

"You are a Customer Support Agent for an e-commerce company, specializing in return policies."

"You are a Medical Research Assistant who summarizes journal articles for doctors."

Key elements of a good persona:

Role: What the agent "thinks" it is.

Goal: What success looks like.

Style: How the agent communicates (formal, casual, etc.).

Constraints: What the agent should never do.

Pro tip:

Use structured prompts, JSON schemas, and system messages to bake this identity deep into the agent’s brain.
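One way to apply this pro tip is to keep the persona as structured data and render it into the system message, together with a JSON schema for the agent's replies. The sketch below uses only the Python standard library; the `Persona` fields, the e-commerce persona, and `reply_schema` are illustrative assumptions, not a format required by any particular LLM provider.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Persona:
    role: str    # what the agent "thinks" it is
    goal: str    # what success looks like
    style: str   # how it communicates
    constraints: list[str] = field(default_factory=list)  # what it must never do

    def to_system_prompt(self) -> str:
        rules = "\n".join(f"- Never {c}" for c in self.constraints)
        return (
            f"You are {self.role}.\n"
            f"Goal: {self.goal}\n"
            f"Style: {self.style}\n"
            f"Constraints:\n{rules}"
        )


# Hypothetical JSON schema the agent's replies are asked to follow.
reply_schema = {
    "type": "object",
    "properties": {
        "answer": {"type": "string"},
        "needs_human": {"type": "boolean"},
    },
    "required": ["answer", "needs_human"],
}

support = Persona(
    role="a Customer Support Agent for an e-commerce company, specializing in return policies",
    goal="resolve the customer's return question accurately in one reply",
    style="friendly and concise",
    constraints=["promise refunds outside the written policy", "share other customers' data"],
)

# The rendered text would go in the system message of whatever LLM API you use.
system_message = (
    support.to_system_prompt() + "\nReply as JSON matching:\n" + json.dumps(reply_schema)
)
print(system_message)
```

Keeping the persona as data rather than a hand-edited string makes it easy to version, test, and reuse the same structure for other agents.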

3. Equip the Agent with the Right Tools

An agent without tools is just a smart parrot.

You need to give your agent abilities:

Read databases

Call APIs

Update records

Send emails

Search internal docs

Types of Tools to Integrate:

Retrieval Tools: Find documents, emails, tickets.

Action Tools: Create, update, delete stuff.

Computation Tools: Run calculations, code snippets.

Knowledge Graphs: Manage evolving, structured knowledge.

Important:

Teach your agent when and why to use each tool.

Random tool spamming = terrible user experience.
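A common way to "teach" the agent when and why to use each tool is to attach a plain-language description to every tool and to validate each requested call before running it. The sketch below is a hypothetical, provider-agnostic registry: the tool names, the `when_to_use` field, and the in-memory ticket data are assumptions for illustration. Real LLM APIs each have their own tool-definition format.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    kind: str          # "retrieval", "action", or "computation"
    when_to_use: str   # shown to the model so it knows when (and why) to call it
    run: Callable[..., object]


# Hypothetical in-memory "database" standing in for a real ticket system.
TICKETS = {"T1": "Printer is offline"}

REGISTRY = {
    t.name: t
    for t in [
        Tool("search_tickets", "retrieval",
             "Use when the user refers to an existing support ticket.",
             lambda ticket_id: TICKETS.get(ticket_id, "not found")),
        Tool("close_ticket", "action",
             "Use only after the user confirms the issue is resolved.",
             lambda ticket_id: TICKETS.pop(ticket_id, None) is not None),
        Tool("add", "computation",
             "Use for arithmetic instead of guessing numbers.",
             lambda a, b: a + b),
    ]
}


def tool_menu() -> str:
    """Text describing every tool, e.g. for inclusion in the system prompt."""
    return "\n".join(f"{t.name} ({t.kind}): {t.when_to_use}" for t in REGISTRY.values())


def call_tool(name: str, *args: object) -> object:
    """Run a tool the model asked for, refusing unknown names instead of guessing."""
    tool = REGISTRY.get(name)
    if tool is None:
        raise ValueError(f"Unknown tool: {name!r}")
    return tool.run(*args)


print(tool_menu())
print(call_tool("search_tickets", "T1"))  # Printer is offline
```

The `when_to_use` text does the teaching; the `call_tool` check is the backstop that keeps a confused model from invoking tools that do not exist.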

4. Build and Use Structured Memory Systems

A smart agent needs memory, but memory needs structure.

There are two types:

Episodic Memory: What happened in this conversation/session.

Long-Term Knowledge: What the agent knows across time (facts, preferences, rules).

Modern Memory Techniques (2025 style):

Graph-based memory for handling dynamic data.

Context windows smartly managed with retrieval and summarization.

Session logs that persist and update intelligently.

Why it matters:

Without memory, agents repeat mistakes, forget instructions, or act randomly across sessions.
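Here is a minimal sketch of the two memory types working together: an episodic buffer that folds older turns into a summary once it exceeds a budget, plus a long-term key-value store that survives across sessions. The `summarize` function is a hypothetical placeholder (in practice often an LLM call), and the budget is counted in turns rather than tokens to keep the example simple.

```python
from dataclasses import dataclass, field


def summarize(turns: list[str]) -> str:
    """Placeholder summarizer (assumption): a real agent might call an LLM here."""
    return f"[summary of {len(turns)} earlier turns]"


@dataclass
class Memory:
    max_turns: int = 4                                        # episodic budget, in turns
    episodic: list[str] = field(default_factory=list)         # this session only
    long_term: dict[str, str] = field(default_factory=dict)   # persists across sessions

    def __post_init__(self) -> None:
        if self.max_turns < 2:
            raise ValueError("max_turns must be at least 2")

    def add_turn(self, turn: str) -> None:
        self.episodic.append(turn)
        if len(self.episodic) > self.max_turns:
            # Fold the oldest turns into one summary line to stay within budget.
            keep = self.max_turns // 2
            old, recent = self.episodic[:-keep], self.episodic[-keep:]
            self.episodic = [summarize(old)] + recent

    def remember(self, key: str, value: str) -> None:
        self.long_term[key] = value  # newer facts overwrite stale ones

    def context(self) -> str:
        """What goes into the model's context window for the next step."""
        facts = "; ".join(f"{k}={v}" for k, v in self.long_term.items())
        return f"Known facts: {facts}\n" + "\n".join(self.episodic)

    def end_session(self) -> None:
        self.episodic.clear()  # episodic memory resets; long-term memory stays


mem = Memory()
mem.remember("preferred_language", "English")
for i in range(1, 6):
    mem.add_turn(f"turn {i}")
print(mem.context())
```

After five turns with a budget of four, the first three turns are replaced by a single summary line while the two most recent turns stay verbatim.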

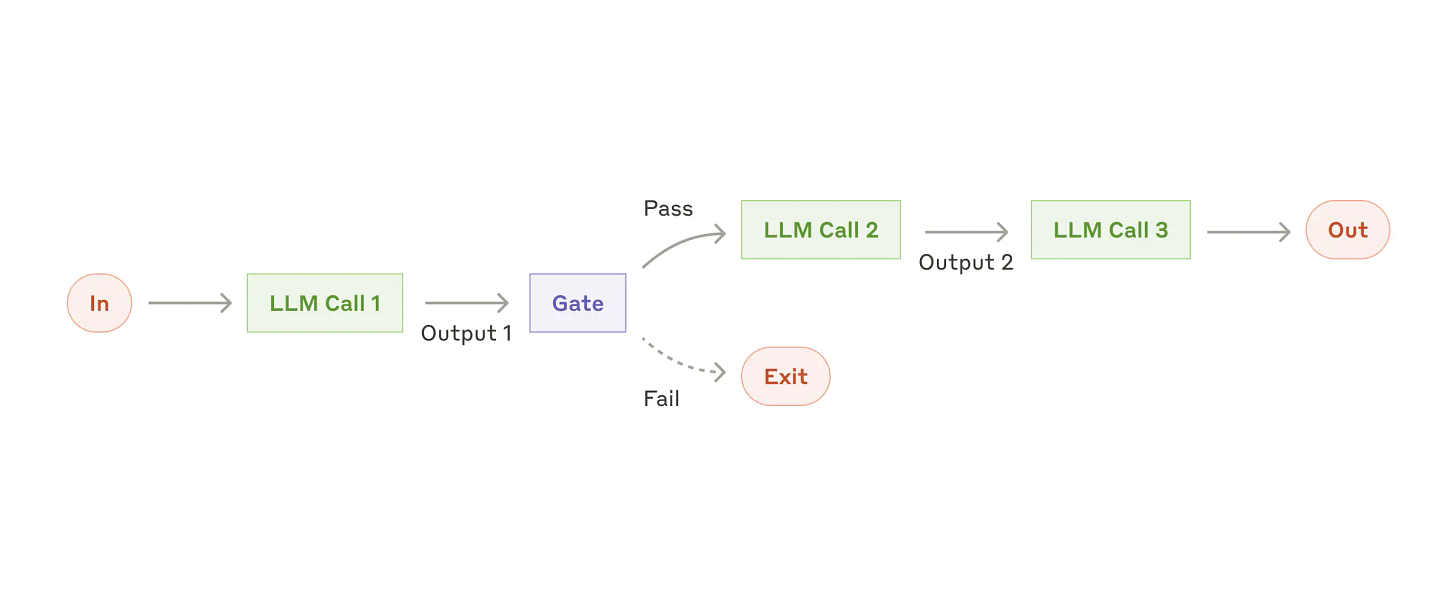

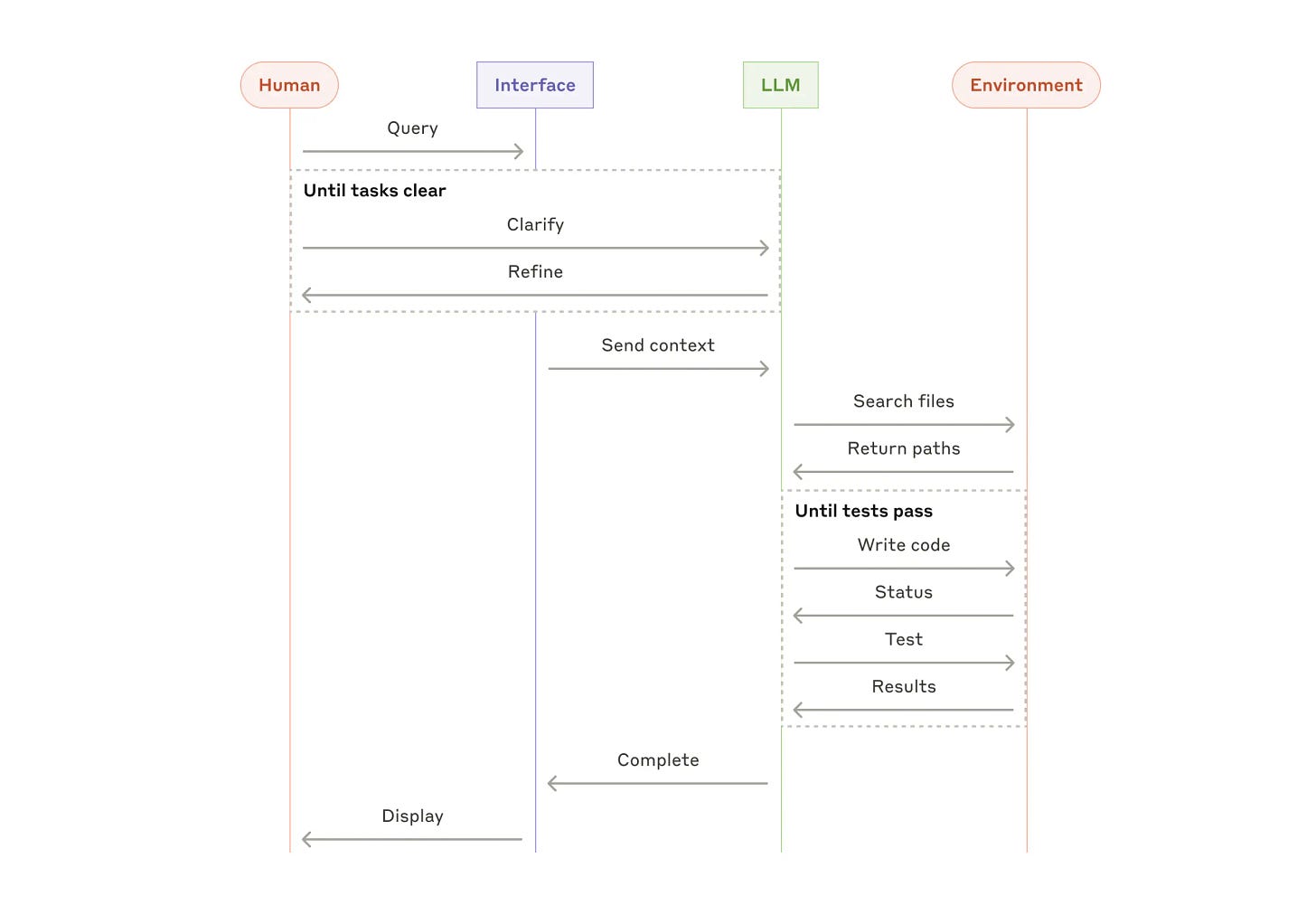

5. Handle Uncertainty Like a Pro

Agents will face uncertain situations. And when they don’t know something?

They should:

Ask clarifying questions.

Defer to a human.

Log the incident for future training.

Don’t pretend your agent is infallible.

Design it to admit when it needs help; users actually trust that more.

Example Best Practices:

If critical data is missing? Ask the user.

If confidence is low? Summarize the options; don't decide alone.

If risk is high? Escalate to a human.
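These three rules map naturally onto a small routing function. In this sketch, the self-estimated confidence score, the 0.7 threshold, and what counts as "high risk" are all assumptions you would calibrate for your own agent. Checking risk first is one reasonable design choice (a risky action escalates even if everything else looks fine), not a standard.

```python
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    ESCALATE = "escalate to a human"
    ASK_USER = "ask the user a clarifying question"
    OFFER_OPTIONS = "summarize options for the user"
    PROCEED = "proceed autonomously"


@dataclass
class Situation:
    missing_fields: list[str] = field(default_factory=list)  # critical data not yet known
    confidence: float = 1.0   # agent's self-estimated confidence, 0..1 (assumption)
    high_risk: bool = False   # e.g. refunds, deletions, medical advice


def decide(s: Situation, min_confidence: float = 0.7) -> Decision:
    if s.high_risk:
        return Decision.ESCALATE        # risk outranks every other signal
    if s.missing_fields:
        return Decision.ASK_USER        # don't guess missing critical data
    if s.confidence < min_confidence:
        return Decision.OFFER_OPTIONS   # don't decide alone when unsure
    return Decision.PROCEED


incident_log: list[tuple[Situation, Decision]] = []


def decide_and_log(s: Situation) -> Decision:
    d = decide(s)
    if d is not Decision.PROCEED:
        incident_log.append((s, d))     # kept for later review and training
    return d


print(decide_and_log(Situation(missing_fields=["order_id"])).value)
```

Every non-autonomous decision lands in `incident_log`, which covers the "log the incident for future training" step as well.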

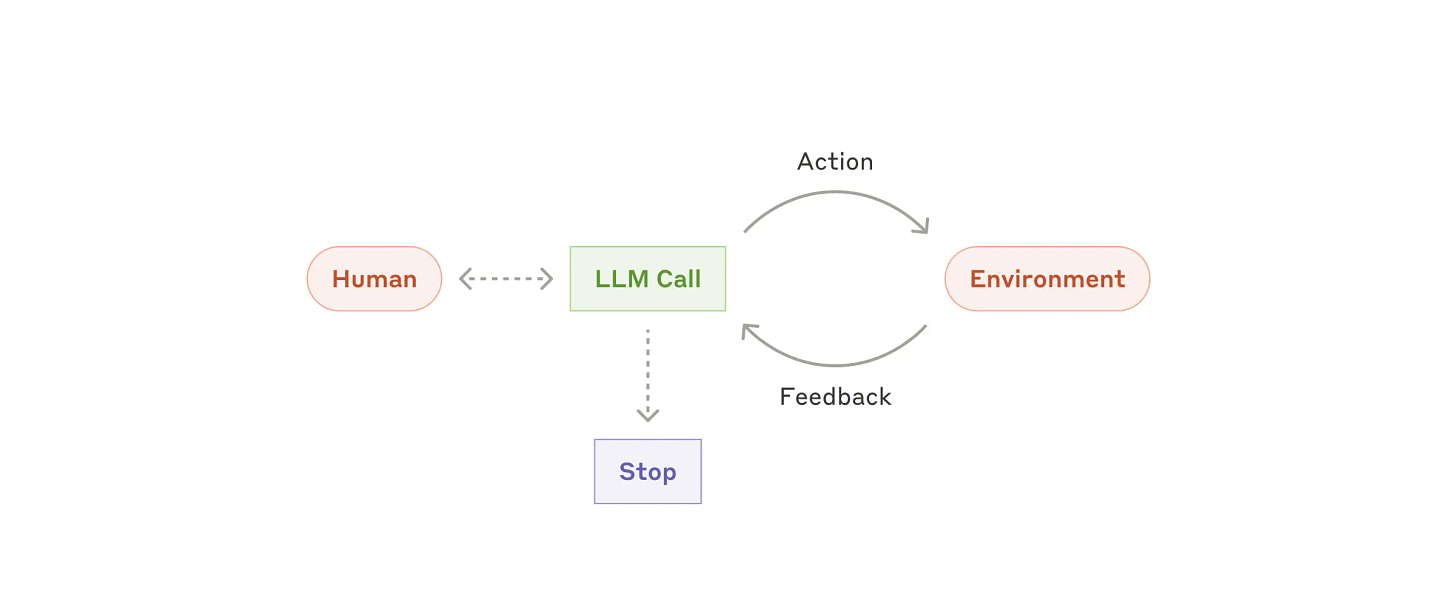

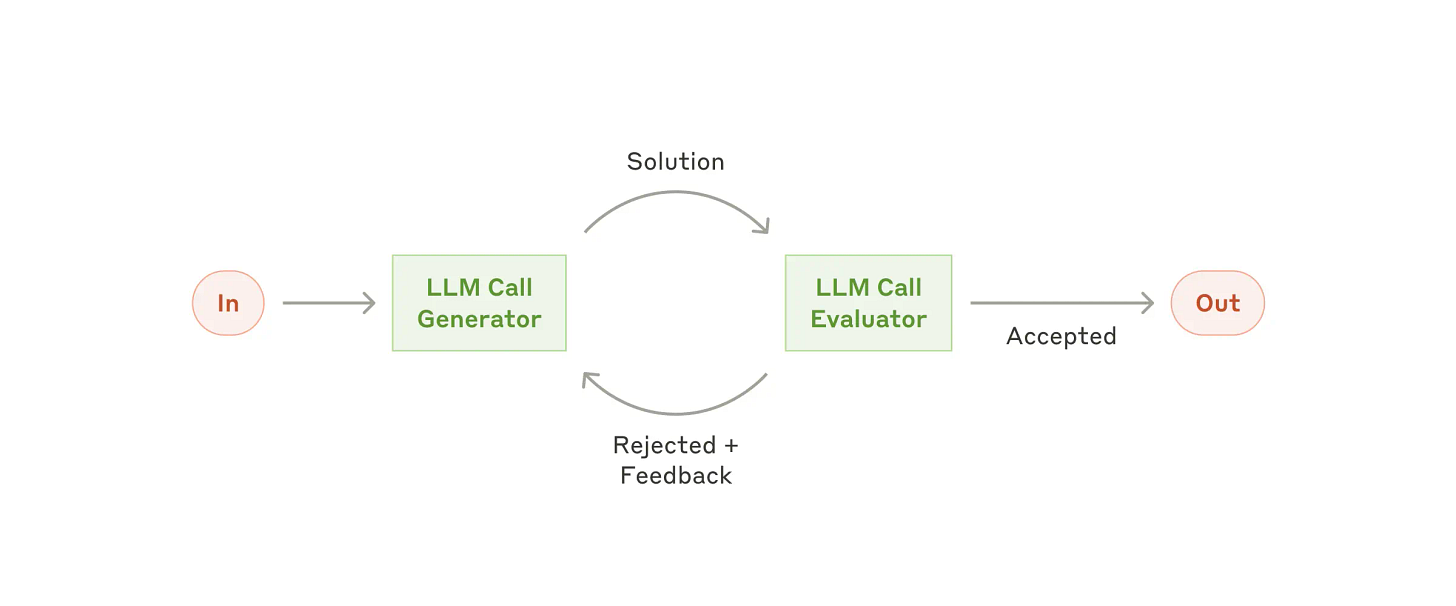

6. Use Evaluation Loops and Feedback

You can’t build once and forget it.

Great agents constantly evolve because builders measure and improve them.

Key Evaluation Strategies:

Human-in-the-loop validation (sample conversations, outcomes)

Task completion rate

User satisfaction feedback

Fine-grained success/failure labels at each action point

Pro tip:

Don’t just measure "Did it answer?"

Measure "Did it accomplish the goal?"
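To make the gap between "Did it answer?" and "Did it accomplish the goal?" concrete, the sketch below computes both rates from a handful of labeled episodes, along with average satisfaction and per-step failure counts. The `Episode` fields and the sample data are made up for illustration; in practice the labels would come from human reviewers or automated checks.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Episode:
    answered: bool                       # the agent produced a reply
    goal_met: bool                       # the user's task was actually completed
    failed_steps: list[str]              # fine-grained labels for steps that went wrong
    satisfaction: Optional[int] = None   # optional 1-5 user rating


def evaluate(episodes: list[Episode]) -> dict[str, object]:
    if not episodes:
        raise ValueError("no episodes to evaluate")
    n = len(episodes)
    ratings = [e.satisfaction for e in episodes if e.satisfaction is not None]
    return {
        "answer_rate": sum(e.answered for e in episodes) / n,
        "task_completion_rate": sum(e.goal_met for e in episodes) / n,
        "avg_satisfaction": sum(ratings) / len(ratings) if ratings else None,
        "failures_by_step": Counter(s for e in episodes for s in e.failed_steps),
    }


# Illustrative, made-up episodes.
episodes = [
    Episode(answered=True, goal_met=True, failed_steps=[], satisfaction=5),
    Episode(answered=True, goal_met=False, failed_steps=["update_crm"], satisfaction=2),
    Episode(answered=True, goal_met=False, failed_steps=["update_crm", "lookup"]),
    Episode(answered=False, goal_met=False, failed_steps=["lookup"], satisfaction=1),
]
report = evaluate(episodes)
print(report)
```

In this toy data the agent answers 75% of the time but completes the task only 25% of the time, which is exactly the gap the pro tip warns about.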

7. Always Prioritize Safety and Ethics

Especially in 2025, users and companies care deeply about:

Privacy: What data does the agent see/store/share?

Security: How are API keys, user info, and files handled?

Bias/Fairness: Is the agent treating different users equally?

Abuse Prevention: Can users exploit the agent to harm others?

Embed guardrails early:

Input filters

Output validation

Safety classifiers

Manual reviews on sensitive actions
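These guardrails can be chained around every agent step. The sketch below is deliberately simple: the regex input filter, the stub `safety_score` classifier, the 0.5 threshold, the email-redaction rule, and the set of sensitive actions are all hypothetical placeholders for whatever filters, trained classifiers, and review queues your stack actually uses.

```python
import re
from dataclasses import dataclass

# Hypothetical patterns and action names; real filters are far more thorough.
BLOCKED_INPUT = [re.compile(r"ignore (all )?previous instructions", re.IGNORECASE)]
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")
SENSITIVE_ACTIONS = {"issue_refund", "delete_account"}


def safety_score(text: str) -> float:
    """Stub safety classifier (assumption): probability that the text is harmful."""
    return 0.9 if "harm" in text.lower() else 0.0


@dataclass
class Verdict:
    allowed: bool
    reason: str
    output: str = ""


def guarded_step(user_input: str, action: str, draft_output: str) -> Verdict:
    # 1. Input filter: reject known prompt-injection phrasing.
    if any(p.search(user_input) for p in BLOCKED_INPUT):
        return Verdict(False, "input blocked")
    # 2. Safety classifier on the agent's draft reply.
    if safety_score(draft_output) >= 0.5:
        return Verdict(False, "unsafe output")
    # 3. Manual review: sensitive actions wait for a human.
    if action in SENSITIVE_ACTIONS:
        return Verdict(False, "queued for human review")
    # 4. Output validation: redact email addresses before showing the reply.
    return Verdict(True, "ok", EMAIL.sub("[redacted email]", draft_output))


print(guarded_step("Where is my order?", "lookup_order", "It ships to jane@example.com today."))
```

Each check returns early with a reason, so blocked steps are easy to log and audit alongside the agent's other evaluation data.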


If you liked this post from AI Agents Simplified, share it with your friends and spread the knowledge! ❣️
