#23 - Your AI Agent Can Run 30+ Hours on a Single Task!

Your next coworker doesn’t sleep, forget, or need coffee 👀

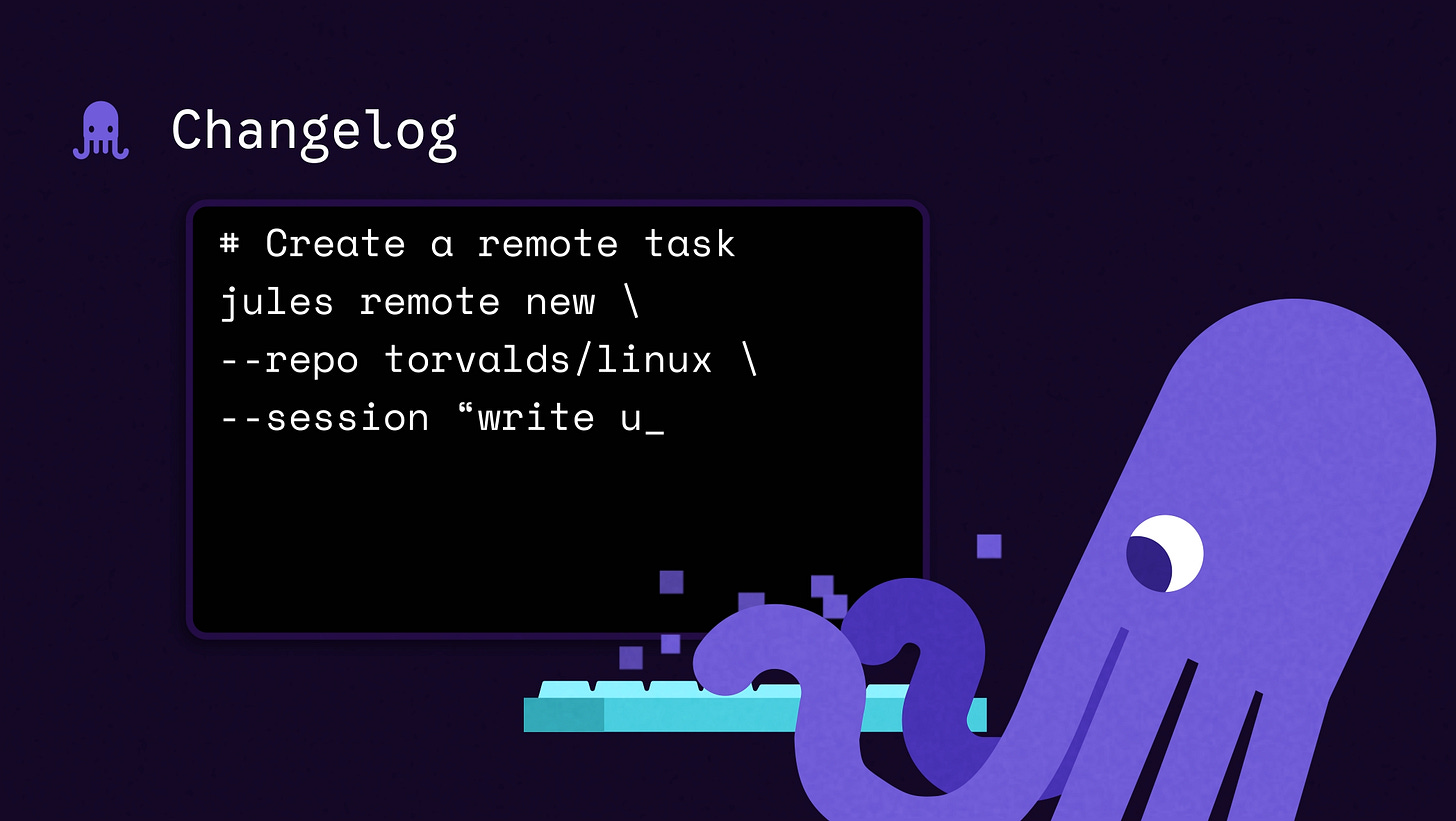

Google’s Jules Moves Into the Terminal

Google’s coding assistant Jules is taking a big step closer to developers’ actual workflows. With the new Jules Tools command-line interface and an upcoming Jules API, Google is clearly done treating Jules like a chat toy; it’s turning it into a real teammate that lives in your terminal.

Now you can start, stop, or verify coding tasks right beside your own commands, and soon, hook Jules into other systems, triggering it from Slack, your CI/CD pipeline, or wherever you already build. That’s a smart move. Instead of chasing the sci-fi dream of fully autonomous coders, Google is quietly making Jules practical, predictable, and close to the ground :)

It’s also been tuned up under the hood: better reliability, lower latency, smarter file handling, and even a bit of memory for your preferences. Google isn’t shouting about “AGI devs”; it’s just making Jules more useful for the people who actually build things. That’s probably the right kind of progress.

Claude Sonnet 4.5 Pushes the Limits of Agent Endurance

Anthropic’s new Claude Sonnet 4.5 has dropped, and the company is calling it a major step forward for agentic AI. The model can reportedly run autonomously for 30+ hours on a single task, like building an app from scratch or managing a long, multi-step project!

That’s ambitious, but it’s also experimental. We’re still very much in the “how far can we push this before it breaks?” phase. It’s a bit like training a tireless intern who’s brilliant at pattern-matching but still occasionally forgets what room it’s in :)

What’s interesting, though, is Anthropic’s focus on stability rather than showmanship. While everyone else is busy launching agent frameworks and flashy demos, Claude is being stress-tested for reliability, and that’s exactly what’s needed if we ever want agents that can actually work unsupervised for more than an hour.

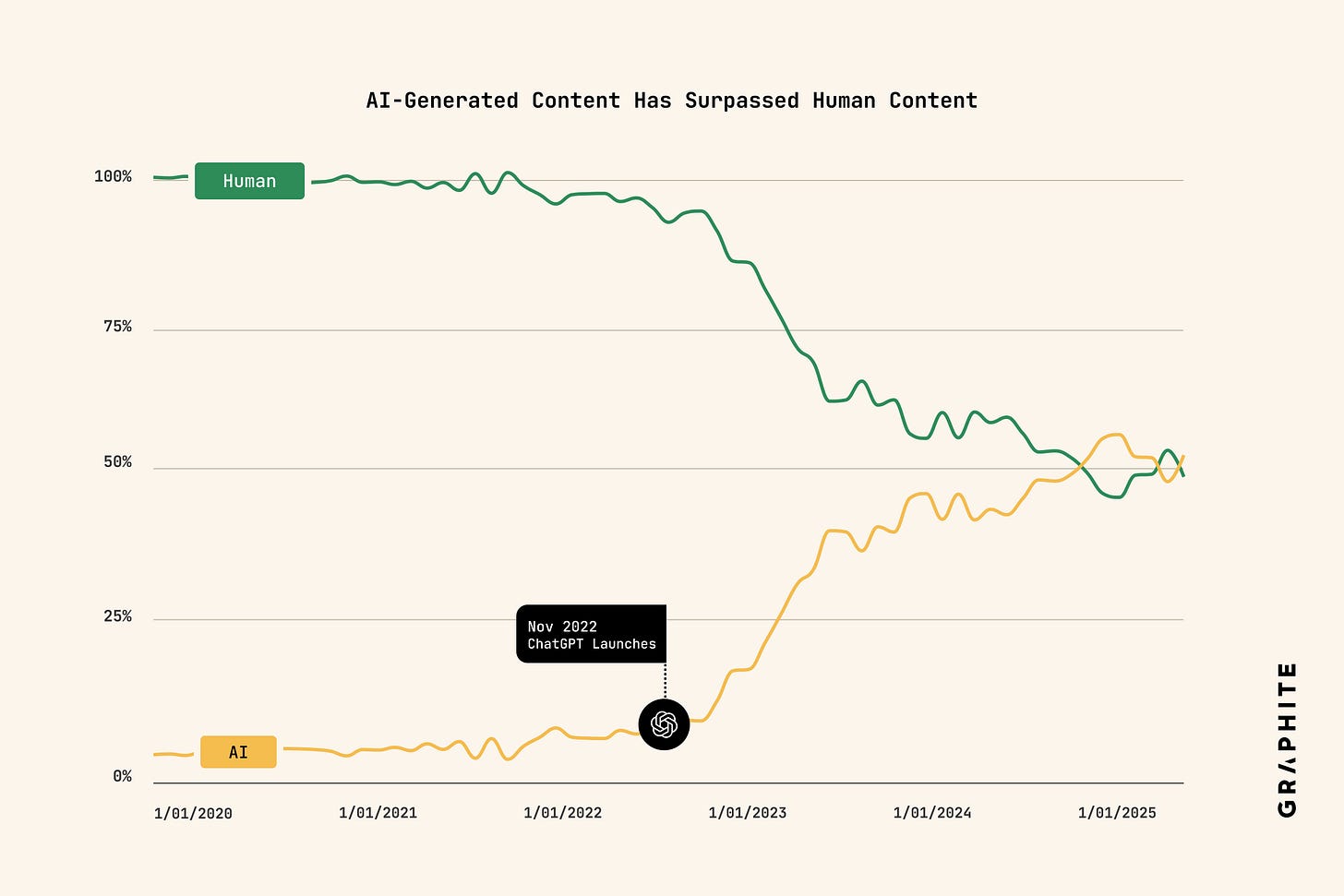

AI Outwrites Humans, But Does Anyone Care?

A new study found that since late 2024, AI-generated articles have officially surpassed human-written ones on the open web. On paper, that sounds like the robots have taken over! In practice, it’s more like they’ve flooded the internet with filler.

The researchers analyzed 65,000 English-language articles and found about 39% were AI-written, a number that’s flattened since last year. The likely reason is that these AI posts don’t perform well in search. Turns out, Google’s algorithms and human readers aren’t all that interested in content that feels synthetic, even when it’s grammatically perfect.

It’s an ironic twist: we trained AI to write like us, and then ignored it once it did. The real opportunity now isn’t churning out more words; it’s finding ways to use AI to sound more distinctly human. Those who figure that out will win the next round of the content game :)

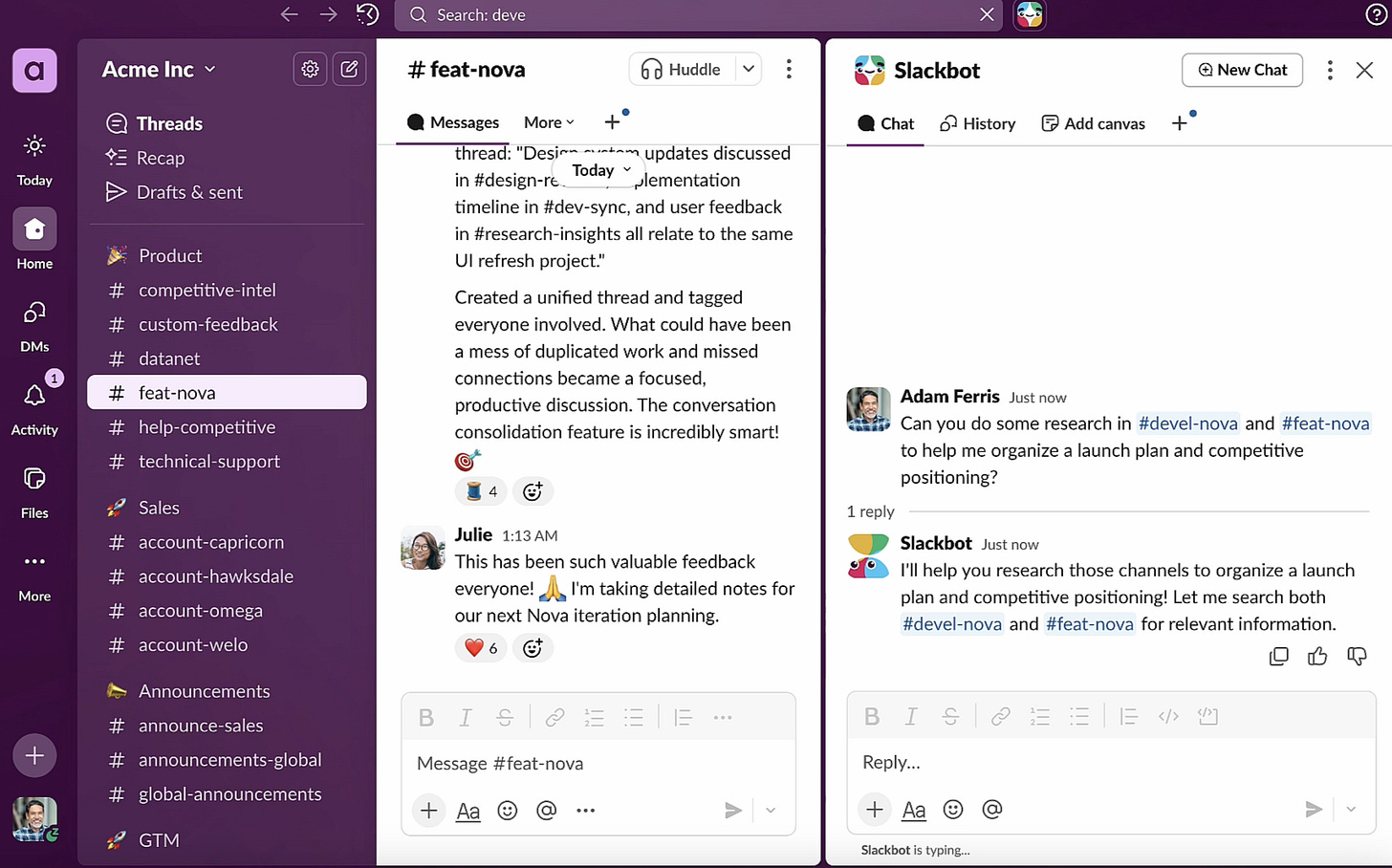

Slackbot Learns to Actually Help

Slack is giving its long-suffering mascot, Slackbot, a full AI makeover. Once limited to reminders and emoji replies, it’s now becoming a genuine assistant that can plan projects, find documents, summarize threads, and even coordinate meetings through Outlook or Google Calendar.

The new Slackbot sits next to the search bar, opening into a side panel where you can just type something like “Find the doc Jay shared in our last meeting” or “What are my top priorities today?” It pulls context from your conversations and files to answer in plain English.

It’s an understated but meaningful update. Instead of launching a whole new AI product, Slack is rebuilding something users already know, just making it a lot smarter. That’s the kind of integration that sticks, because it doesn’t ask anyone to change habits. Within a year, Slackbot might quietly become the AI coworker everyone uses without even realizing it.

🔮 Cool AI Tools & Repositories

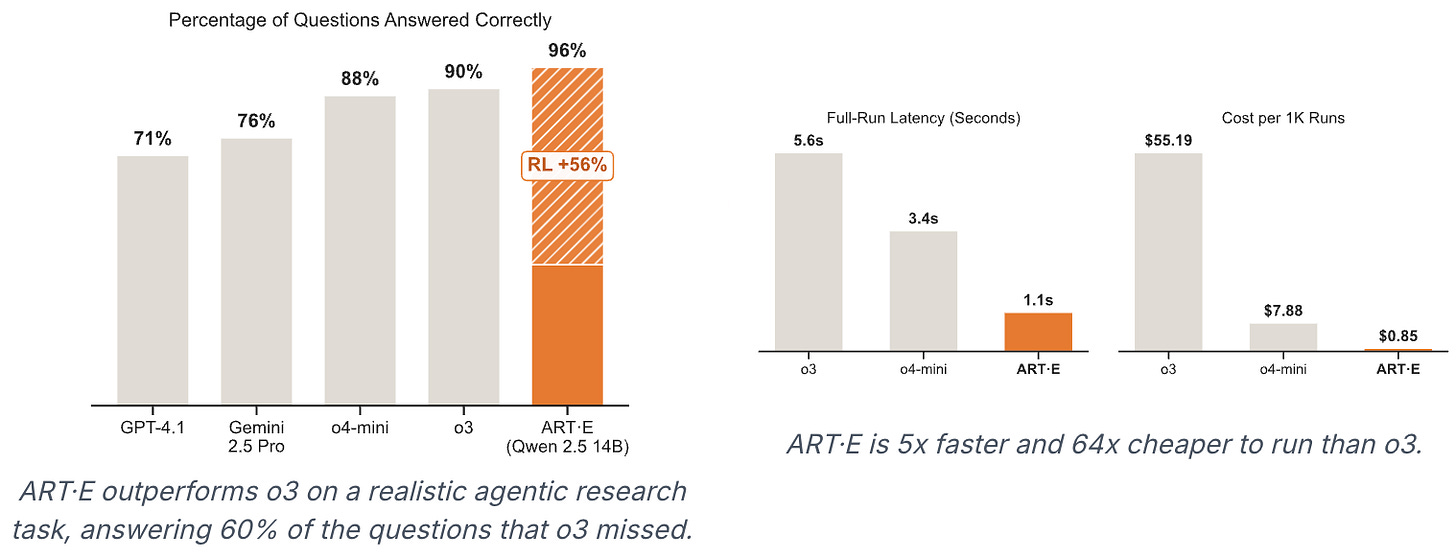

Agent Reinforcement Trainer: train multi-step agents for real-world tasks using GRPO. Give your agents on-the-job training. Reinforcement learning for Qwen2.5, Qwen3, Llama, Kimi, and more!

dyad: Dyad is a local, open-source AI app builder. It’s fast, private, and fully under your control.

VideoLingo: Netflix-level subtitle cutting, translation, alignment, and even dubbing.
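GRPO (Group Relative Policy Optimization), the method behind Agent Reinforcement Trainer, skips a separately learned value model: it samples several rollouts for the same task and scores each one relative to the rest of its group. The sketch below shows only that group-relative advantage step, under our own assumptions. It is not Agent Reinforcement Trainer’s API, the function name is hypothetical, and a full GRPO update also needs the clipped policy-ratio objective (and often a KL penalty), which are left out here.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Score each rollout against the other rollouts sampled for the same task.

    A_i = (r_i - mean(r)) / (std(r) + eps)
    eps avoids division by zero when every rollout gets the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population standard deviation over the group
    return [(r - mu) / (sigma + eps) for r in rewards]


# Usage: four hypothetical rollouts of one agent task, each scored from 0 to 1.
rewards = [1.0, 0.0, 0.5, 0.5]
print(group_relative_advantages(rewards))
```

Here the best rollout gets a positive advantage, the worst gets an equal negative one, and the two middling rollouts get zero, so training nudges the agent toward whatever worked best within that group. Some implementations divide by the sample standard deviation instead of the population one used here.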

📃 Paper of the Week

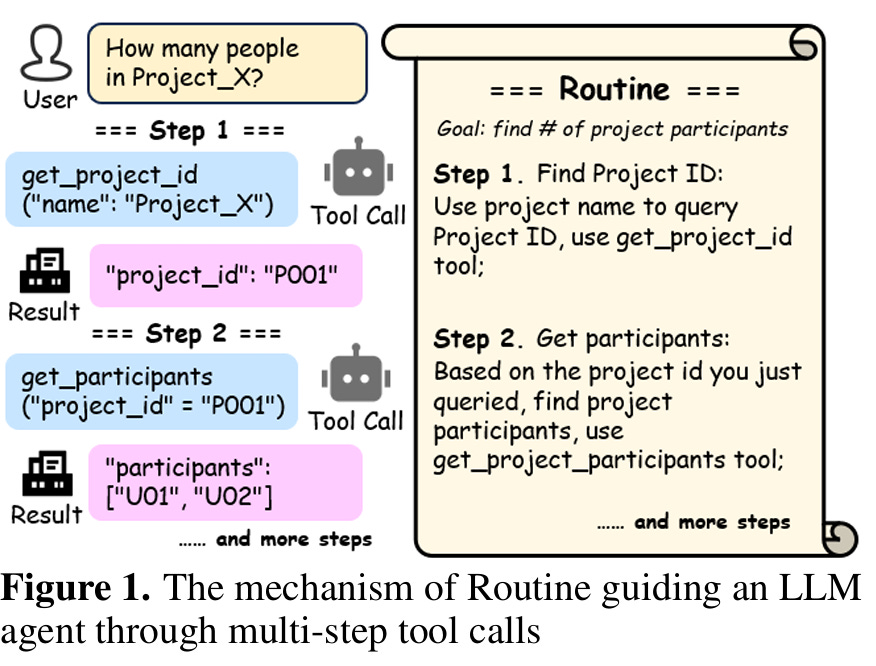

Routine: A Structural Planning Framework for LLM Agent System in Enterprise

This week’s pick is “Routine: A Structural Planning Framework for LLM Agent System in Enterprise” by Guancheng Zeng et al. It introduces Routine, a practical, well-structured planning framework that helps LLM-based agents perform better in enterprise settings. The main goal is to bring more stability, precision, and domain awareness to multi-step agent workflows, especially in real-world scenarios like HR automation. It’s a clean attempt at bridging LLM reasoning and reliable execution in messy, tool-heavy environments.

Here’s what makes it stand out:

Structured Planning Format: Routine defines a clean, multi-step planning structure using standardized JSON. This helps ensure consistent tool calls, better parameter handoff, and more stable task execution across agent runs.

Solving Enterprise-Specific Pain Points: It targets classic enterprise challenges like injecting domain-specific knowledge into agents and improving tool coordination by generating context-aware plans called Routines. This leads to much higher accuracy, especially in complex flows like HR operations.

LLM-Guided Plan Optimization: Draft plans are first created using expert-annotated prompts, then optimized by a specialized LLM to produce detailed, executable toolchains. It’s a hybrid setup where LLMs do the reasoning and decomposition, but with structured guidance.

Lightweight Execution Models: Instead of relying on heavyweight LLMs at every step, Routine uses small instruction-following models for tool execution, reducing compute cost while maintaining accuracy. It also integrates a summarization module to wrap up multi-step outputs cleanly.

Scenario-Specific Training + Distillation: The team created a Routine-compatible dataset tailored to multi-tool, domain-specific workflows. Fine-tuning models like Qwen3-14B on this dataset resulted in massive gains, around a 50% accuracy improvement, thanks to the distillation and structure Routine enforces.
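To make the “standardized JSON plan with parameter handoff” idea concrete, here is a minimal sketch. The field names (`id`, `tool`, `params`), the `"$step.field"` reference syntax, and the HR tools are all hypothetical stand-ins, not the schema from the paper. Where Routine hands execution to a small instruction-following model, a plain Python loop stands in for it here.

```python
import json
from typing import Any, Callable

# Hypothetical Routine-style plan: each step names a tool and its parameters.
# Values written as "$<step_id>.<field>" are filled in from earlier step outputs.
PLAN_JSON = """
[
  {"id": "s1", "tool": "lookup_employee", "params": {"name": "Jay"}},
  {"id": "s2", "tool": "get_leave_balance", "params": {"employee_id": "$s1.employee_id"}},
  {"id": "s3", "tool": "submit_leave",
   "params": {"employee_id": "$s1.employee_id", "days": 2, "available": "$s2.days_left"}}
]
"""


# Hypothetical stub tools standing in for real enterprise APIs.
def lookup_employee(name: str) -> dict:
    return {"employee_id": "E-042", "name": name}


def get_leave_balance(employee_id: str) -> dict:
    return {"days_left": 5}


def submit_leave(employee_id: str, days: int, available: int) -> dict:
    ok = days <= available
    return {"status": "submitted" if ok else "rejected", "employee_id": employee_id}


TOOLS: dict[str, Callable[..., dict]] = {
    "lookup_employee": lookup_employee,
    "get_leave_balance": get_leave_balance,
    "submit_leave": submit_leave,
}


def resolve(value: Any, outputs: dict[str, dict]) -> Any:
    """Replace a "$step.field" reference with the matching earlier output."""
    if isinstance(value, str) and value.startswith("$"):
        step_id, field = value[1:].split(".", 1)
        return outputs[step_id][field]
    return value  # literal values pass through unchanged


def run_plan(plan: list[dict]) -> dict[str, dict]:
    """Execute steps in order, handing parameters forward between tools."""
    outputs: dict[str, dict] = {}
    for step in plan:
        tool = TOOLS[step["tool"]]  # an unknown tool name raises KeyError early
        args = {k: resolve(v, outputs) for k, v in step["params"].items()}
        outputs[step["id"]] = tool(**args)
    return outputs


results = run_plan(json.loads(PLAN_JSON))
print(results["s3"])
```

Running it prints `{'status': 'submitted', 'employee_id': 'E-042'}`. The employee ID found in step `s1` flows into both later steps without anything having to re-derive it, which is the kind of stable parameter handoff a fixed plan format is meant to make routine.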

Overall, Routine is a big step toward making LLM agents actually useful in structured enterprise environments: it gives us a clear blueprint for going from fuzzy prompts to reliable, modular execution with tools.

💌 A Little Note from Us

Thanks for continuing to support the growth of this newsletter and for being patient while waiting for new issues…

From now on, you won’t have to wait long for the latest updates from the world of AI Agents. We’re putting in our best effort to bring you high-quality, engaging content every week in a way that feels fresh and fun to read :)

Big things are coming for AI Agents Simplified. If you’d like to sponsor upcoming editions or collaborate in any way, you can reach us through our page.

Cheers 🍻