#14 – Build Your AI Agent Locally, Efficiently, and 78% Cheaper!

From Local Stacks to Smarter Chats! Dive into cost-cutting setups, agent protocols, and how AI agents really talk 🤝

Welcome to the 14th issue of AI Agents Simplified! 🎉

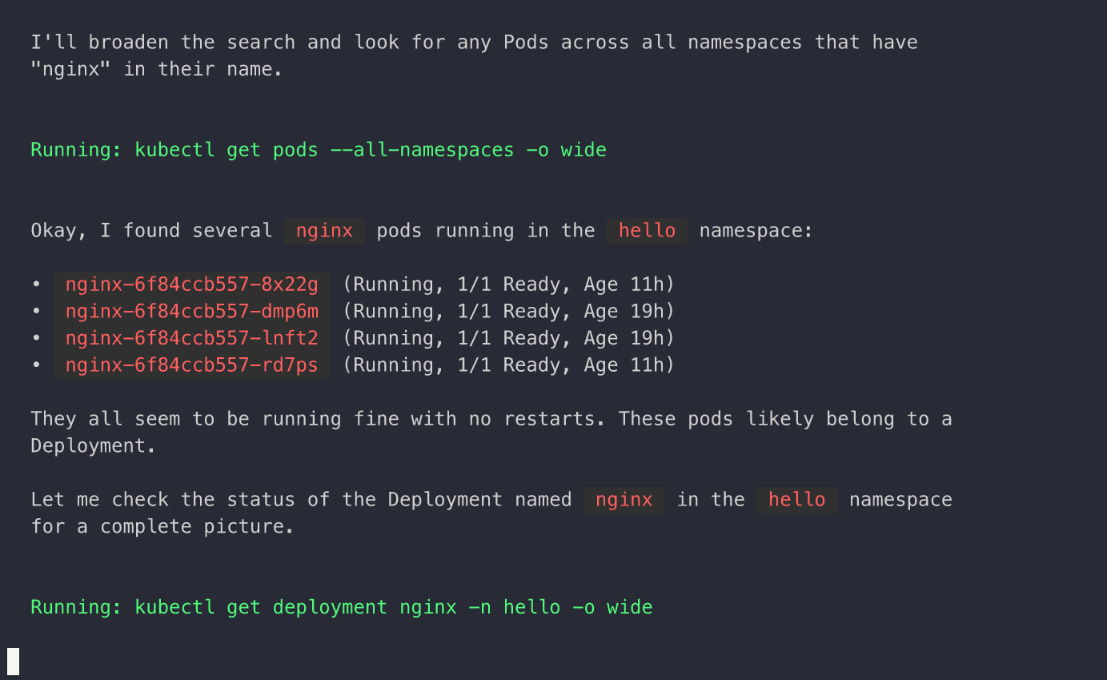

Build Your Own AI Automation Stack (Locally on Windows)

Want full control over your AI workflows with zero API limits or token fees? In this step-by-step guide, we walk you through setting up a fully local AI automation stack using Docker, n8n, and LM Studio (yes, on Windows).

We cover everything from hardware recommendations to installing DeepSeek models in LM Studio, connecting it to n8n, and running your own local agents with no internet connection required.
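To make the "connect LM Studio to n8n" step more concrete, here is a minimal sketch of what talking to a local model can look like. It assumes LM Studio's local server is running with its OpenAI-compatible endpoint on the default port (1234). The model name and the helper functions `build_chat_request` and `ask_local_model` are hypothetical, so swap in whatever your setup shows.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port and
# exposes an OpenAI-compatible API. Change this if your setup differs.
BASE_URL = "http://localhost:1234/v1"

# Hypothetical model identifier: use the name LM Studio shows for your loaded model.
MODEL = "your-local-deepseek-model"


def build_chat_request(prompt, model=MODEL, temperature=0.2):
    """Build an OpenAI-style chat completion payload as a plain dict."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful local assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }


def ask_local_model(prompt, base_url=BASE_URL, timeout=60.0):
    """POST the payload to the local server and return the reply text."""
    payload = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-style responses put the text under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Once the server is up, `ask_local_model("Summarize what n8n does in one sentence.")` should return the model's reply. If n8n runs inside Docker Desktop on Windows, the base URL from inside the container is likely `http://host.docker.internal:1234/v1` rather than `localhost`, but treat that as something to verify in your own environment.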

Self-host your automations. Own your data. Scale on your terms.

How Do AI Agents Actually Talk to Each Other?

And why does it matter so much?

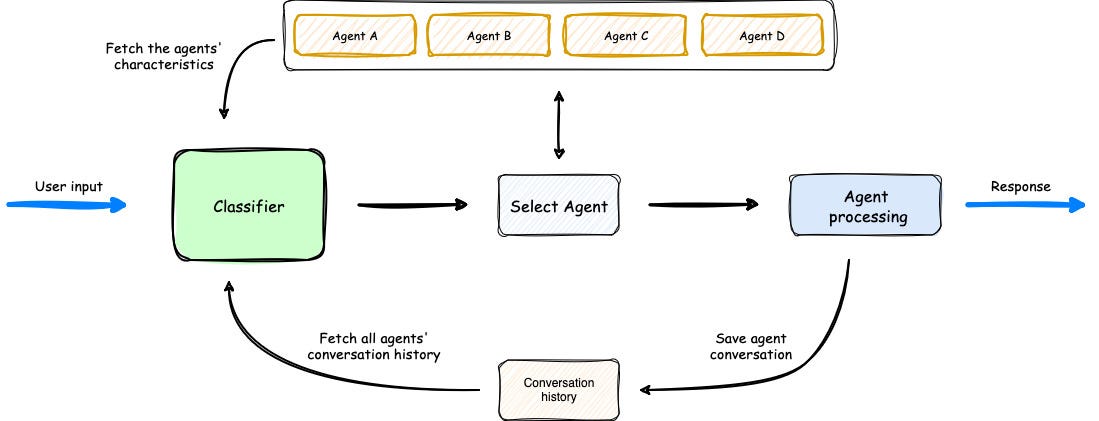

In this deep dive, we break down one of the biggest blockers in building scalable, multi-agent systems: no shared language between agents.

If you’re serious about LLM agents, you need to understand agent protocols: how agents communicate with tools, with each other, and with external APIs.

This post is based on a detailed survey by researchers at Shanghai Jiao Tong University + ANP, covering:

A clean 2D classification of agent protocols (context-oriented vs. inter-agent, general-purpose vs. domain-specific)

Real-world use case: planning a 5-day trip across agents

7 key performance metrics for evaluating any protocol

A future vision: evolvable, layered, privacy-aware agent networks

If TCP/IP connected the internet, agent protocols will connect intelligent systems. Time to learn how they work.
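To get a feel for what a "shared language" between agents might involve, here is a toy message envelope, using the trip-planning scenario as the example. This is only an illustrative sketch: the `AgentMessage` class and its field names are hypothetical and are not taken from the survey or from any real protocol.

```python
import json
import uuid
from dataclasses import dataclass, field, asdict
from typing import Optional


@dataclass
class AgentMessage:
    """Hypothetical envelope; field names are illustrative, not from a real protocol."""
    sender: str
    recipient: str
    intent: str                      # e.g. "request", "inform", "error"
    payload: dict
    message_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    reply_to: Optional[str] = None   # id of the message being answered, if any

    def to_json(self) -> str:
        """Serialize to a JSON string that any agent can parse."""
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "AgentMessage":
        """Rebuild a message from its JSON form."""
        return cls(**json.loads(raw))


# A planner agent asks a flight agent for help with a 5-day trip.
request = AgentMessage(
    sender="trip_planner",
    recipient="flight_agent",
    intent="request",
    payload={"task": "find_flights", "trip_days": 5},
)

# The flight agent receives the JSON, parses it, and answers the same message id.
received = AgentMessage.from_json(request.to_json())
reply = AgentMessage(
    sender=received.recipient,
    recipient=received.sender,
    intent="inform",
    payload={"status": "ok"},
    reply_to=received.message_id,
)
```

The point of the sketch is that both agents agree on the envelope (who, to whom, why, and which message is being answered) before they agree on anything else. Real protocols layer discovery, authentication, and capability negotiation on top of that.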

How AI Agents Chat? And Why It Matters?

As large language models (LLMs) keep changing industries from customer support to healthcare, the emergence of LLM-based agents is opening a new chapter in AI. These agents can make decisions on their own, reason through problems, and use external tools, which is changing how we handle complex tasks. But there’s a big problem holding things back: there’…

Build Your AI Agent 78% Cheaper? Yes, Really.

Smart LLM routing = serious savings.

If you're running LLM-based agents, the biggest hidden cost isn't in building them; it's in using them.

Most people send every prompt to GPT-4, even for tasks like "What's the weather today?" That’s expensive overkill.

In this post, we break down one of the smartest (and most underused) techniques in the AI stack: dynamic model selection.

What you’ll learn:

Why model routing matters for scale (especially for startups + indie builders)

5 ways to cut LLM costs without sacrificing quality

How reinforcement learning and bandits help agents pick the right model

A blueprint to build your own routing system

TL;DR: Not every task needs a supercomputer. Let your agent think before it spends.
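Here is a rough sketch of how a bandit could pick a model per task category. It uses a simple epsilon-greedy strategy, which is one common bandit approach and not necessarily the method from the post. The model names, relative costs, and the `cost_weight` trade-off are all made-up assumptions for illustration.

```python
import random


class BanditRouter:
    """Epsilon-greedy router: learns which model gives the best
    quality-minus-cost reward for each task category."""

    def __init__(self, models, epsilon=0.1, cost_weight=0.5, seed=None):
        self.models = dict(models)      # model name -> relative cost per call (assumed)
        self.epsilon = epsilon          # probability of exploring a random model
        self.cost_weight = cost_weight  # how strongly cost is penalized
        self.rng = random.Random(seed)
        self.stats = {}                 # (category, model) -> [pulls, average reward]

    def best(self, category):
        """Model with the highest average reward seen so far for this category."""
        return max(
            self.models,
            key=lambda m: self.stats.get((category, m), [0, 0.0])[1],
        )

    def choose(self, category):
        """Pick a model: try untested ones first, then explore or exploit."""
        for model in self.models:
            if self.stats.get((category, model), [0, 0.0])[0] == 0:
                return model
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.models))
        return self.best(category)

    def update(self, category, model, quality):
        """Record feedback; quality is a score in [0, 1] from your own evaluation."""
        reward = quality - self.cost_weight * self.models[model]
        entry = self.stats.setdefault((category, model), [0, 0.0])
        entry[0] += 1
        entry[1] += (reward - entry[1]) / entry[0]  # incremental mean


# Hypothetical setup: one cheap model and one expensive model.
router = BanditRouter({"small-model": 0.1, "large-model": 1.0}, seed=0)
model = router.choose("simple_question")
router.update("simple_question", model, quality=1.0)
```

In practice the `quality` signal might come from user feedback, an automatic grader, or task success. The design choice worth noticing is that cost is folded into the reward, so a cheap model that answers well enough beats an expensive one that answers equally well.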

Build Your AI Agent 78% Cheaper!

AI agents are changing the game for automating tasks, scaling support, and powering new products. But if you've played with LLMs long enough, you already know the biggest problem: cost.

📰 What Happened in the Last Week?

Apple x Anthropic? AI Coding Comes to Xcode

Apple is quietly testing something big under the hood.

According to Bloomberg, Apple is teaming up with Anthropic to bring Claude Sonnet to Xcode, their flagship code editor. The idea? An AI assistant that can write, edit, and test code inside your dev environment.

For now, it’s only rolling out internally, and Apple hasn’t said when (or if) we’ll see a public release. But here’s what the tool can reportedly do:

Handle requests through a chat-like interface

Auto-generate and test UIs

Help squash bugs faster

In short, it's aiming to be Copilot, but for Apple devs.

This comes after Apple’s slow progress on AI compared to peers like Microsoft and OpenAI. Remember “Swift Assist,” announced at WWDC 2024? Still MIA. And Siri’s big upgrade? Delayed. Again.

Meanwhile, OpenAI is rumored to be in talks for a $3B acquisition of Windsurf, an AI coding startup, and Microsoft says 20–30% of code in some internal projects is already AI-generated.

So while Apple’s been late to the AI party, this Claude-powered Xcode integration could be their way of playing catch-up fast.

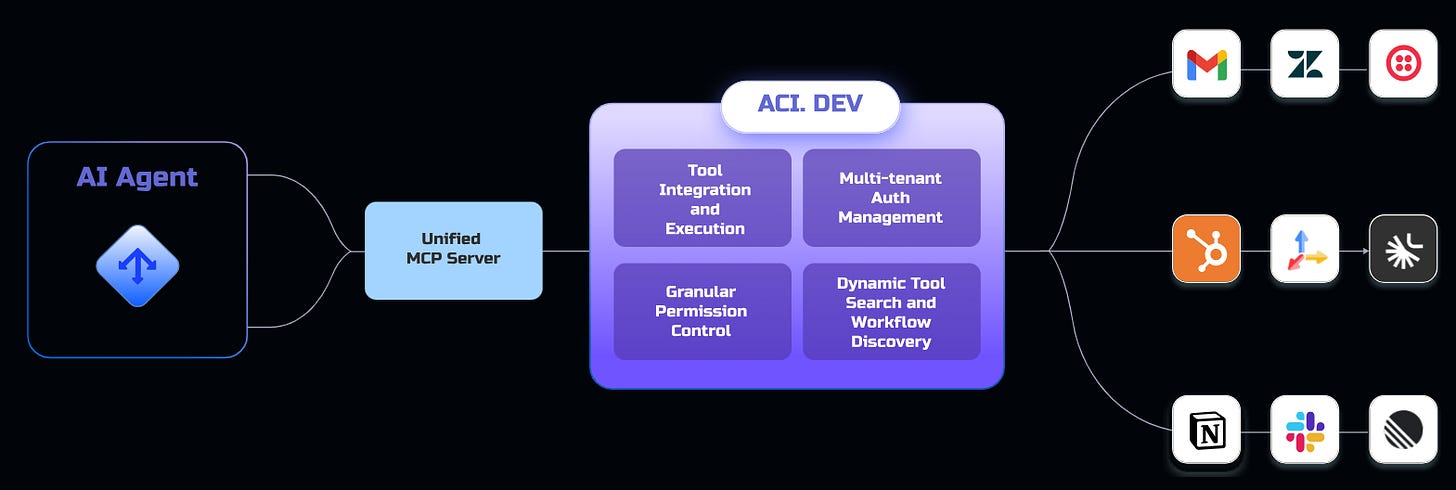

🔮 Cool AI Tools & Repositories

📃 Paper of the Week

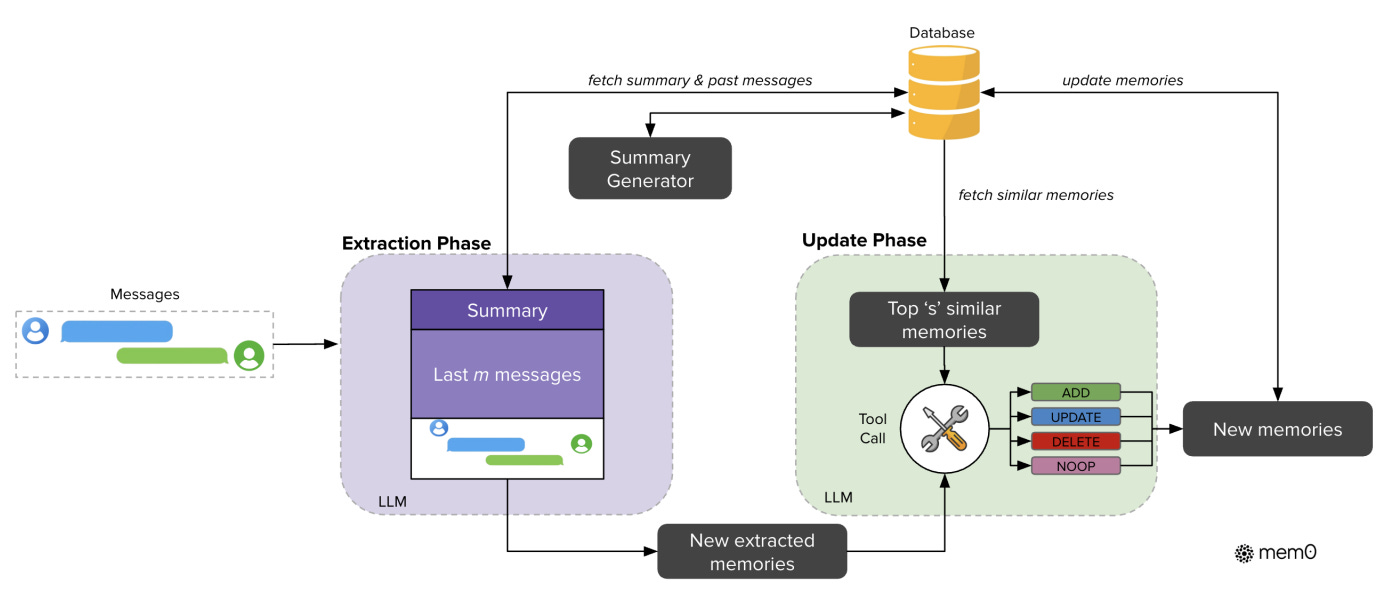

Mem0: Building Production-Ready AI Agents with Scalable Long-Term Memory

If you’re serious about building production-ready AI agents, you’ve probably hit the memory wall. LLMs forget things fast, and stuffing every convo into the context window just isn’t scalable.

That’s where Mem0 comes in. This new memory system extracts, updates, and retrieves only what matters, keeping your agent sharp and consistent without blowing through tokens.

It even comes with a graph-based version (Mem0^g) for structured memory (think: entity relationships and reasoning over time).

Highlights:

Two-phase memory pipeline: extract candidate memories (via LLM), then update the memory store (ADD/UPDATE/DELETE).

Graph version (Mem0^g) stores memory as labeled triplets (e.g., Alice LIVES_IN SF).

Outperforms baselines like MemGPT and OpenAI’s memory system on the LOCOMO benchmark.

Reduces token usage by 90%+ and cuts latency from 17s to 1.4s.

Better performance in temporal and multi-hop reasoning with lower compute demands.

In short: state-of-the-art performance + real-world efficiency = ready for production.
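To picture the update phase and the triplet idea from the highlights, here is a toy sketch. It is not Mem0's actual API or implementation: the `Fact` and `TripletMemory` names are hypothetical, facts are assumed to be already extracted, and a simple rule stands in for the LLM that decides which operation to apply.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    """A labeled triplet such as (Alice, LIVES_IN, SF)."""
    subject: str
    relation: str
    obj: str


class TripletMemory:
    """Toy memory store keyed by (subject, relation)."""

    def __init__(self):
        self._facts = {}  # (subject, relation) -> object

    def ingest(self, fact, retract=False):
        """Apply one candidate fact and return the operation used, or None.

        A rule-based stand-in for the decision step: in the real system
        an LLM would choose the operation.
        """
        key = (fact.subject, fact.relation)
        current = self._facts.get(key)
        if retract:
            op = "DELETE" if current is not None else None
        elif current is None:
            op = "ADD"
        elif current != fact.obj:
            op = "UPDATE"
        else:
            op = None  # already known, nothing to change

        if op == "DELETE":
            del self._facts[key]
        elif op in ("ADD", "UPDATE"):
            self._facts[key] = fact.obj
        return op

    def query(self, subject):
        """Return only the facts about one subject, not the whole history."""
        return [
            Fact(s, r, o) for (s, r), o in self._facts.items() if s == subject
        ]


memory = TripletMemory()
memory.ingest(Fact("Alice", "LIVES_IN", "SF"))   # new fact is added
memory.ingest(Fact("Alice", "LIVES_IN", "NYC"))  # newer fact replaces the old one
```

The token savings in a design like this come from the last method: the agent pulls a handful of relevant facts into the prompt instead of replaying the full conversation.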